Using Native Math Libraries to Accelerate Spark Machine Learning Applications

by Zuling Kang

Spark ML is one of the dominant frameworks for many major machine learning algorithms, such as the Alternating Least Squares (ALS) algorithm for recommendation systems, the Principal Component Analysis algorithm, and the Random Forest algorithm. However, frequent misconfiguration means the potential of Spark ML is seldom fully utilized. Using native math libraries for Spark ML is a method to achieve that potential.

This article discusses how to accelerate model training speed by using native libraries for Spark ML. It also discusses why Spark ML benefits from native libraries, how to enable native libraries with CDH Spark, and provides performance comparisons between Spark ML on different native libraries.

Native Math Libraries for Spark ML

Spark’s MLlib uses the Breeze linear algebra package, which depends on netlib-java for optimized numerical processing. netlib-java is a wrapper for low-level BLAS, LAPACK, and ARPACK libraries. However, due to licensing issues with runtime proprietary binaries, neither the Cloudera distribution of Spark nor the community version of Apache Spark includes the netlib-java native proxies by default. So if you make no manual configuration, netlib-java only uses the F2J library, a Java-based math library that is translated from Fortran77 reference source code.

To check whether you are using native math libraries in Spark ML or the Java-based F2J, use the Spark shell to load and print the implementation library of netlib-java. For example, the following commands return information on the BLAS library and include that it is using F2J in the line, com.github.fommil.netlib.F2jBLAS, which is bolded below:

scala> import com.github.fommil.netlib.BLAS

import com.github.fommil.netlib.BLAS

scala> println(BLAS.getInstance().getClass().getName())

18/12/10 01:07:06 WARN netlib.BLAS: Failed to load implementation from: com.github.fommil.netlib.NativeSystemBLAS

18/12/10 01:07:06 WARN netlib.BLAS: Failed to load implementation from: com.github.fommil.netlib.NativeRefBLAS

com.github.fommil.netlib.F2jBLAS

Adoption of Native Libraries in Spark ML

Anand Iyer and Vikram Saletore showed in their engineering blog post that native math libraries like OpenBLAS and Intel’s Math Kernel Library (MKL) accelerate the training performance of Spark ML. However, the range of acceleration varies from model to model.

As for the matrix factorization model used in recommendation systems (the Alternating Least Squares (ALS) algorithm), both OpenBLAS and Intel’s MKL yield model training speeds that are 4.3 times faster than with the F2J implementation. Others, like the Latent Dirichlet Allocation (LDA), the Primary Component Analysis (PCA), and the Singular Value Decomposition (SVD) algorithms show 56% to 72% improvements for Intel MKL, and 10% to 50% improvements for OpenBLAS.

However, the blog post also demonstrates that there are some algorithms, like Random Forest and Gradient Boosted Tree, that receive almost no speed acceleration after enabling OpenBLAS or MKL. The reasons for this are largely that the training set of these tree-based algorithms are not vectors. This indicates that the native libraries, either OpenBLAS or MKL, adapt better to algorithms whose training sets can be operated on as vectors and are computed as a whole. It is more effective to use math acceleration for algorithms that operate on training sets using matrix operations.

How To Enable Native Libraries

The following sections show how to enable libgfortran and MKL for Spark ML. CDH 5.15 on RHEL 7.4 was used in the example and to produce the performance comparisons. The same functionality is available in CDH 6.x.

Enabling the libgfortran Native Library

-

Enable the libgfortran 4.8 library on every CDH node. For example, in RHEL, run the following command on each node:

yum -y install libgfortran -

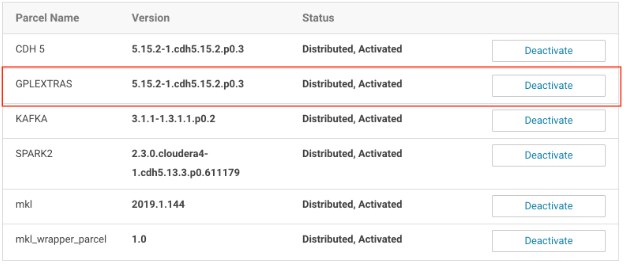

Install the GPLEXTRAS parcel in Cloudera Manager, and activate it:

- To install the GPLEXTRAS parcel, see Installing the GPL Extras Parcel in the Cloudera Manager documentation.

- To activate the package, see Activating a Parcel.

-

After activating the GPLEXTRAS parcel, in Cloudera Manager, navigate to to confirm that the GPLEXTRAS parcel is activated:

The GPLEXTRAS parcel acts as the wrapper for libgfortran.

- Restart the appropriate CDH services as guided by Cloudera Manager.

-

As soon as the restart is complete, use the Spark shell to verify that the native library is being loaded by using the following commands:

scala> import com.github.fommil.netlib.BLAS import com.github.fommil.netlib.BLAS scala> println(BLAS.getInstance().getClass().getName()) 18/12/23 06:29:45 WARN netlib.BLAS: Failed to load implementation from: com.github.fommil.netlib.NativeSystemBLAS 18/12/23 06:29:45 INFO jni.JniLoader: successfully loaded /tmp/jniloader6112322712373818029netlib-native_ref-linux-x86_64.so com.github.fommil.netlib.NativeRefBLASYou might be wondering why there is still a warning message about NativeSystemBLAS failing to load. Don’t worry about this. It is there because we are only setting the native library that Spark uses not for system-wide use. You can safely ignore this warning.

Enabling the Intel MKL Native Library

-

Intel provides the MKL native library as a Cloudera Manager parcel on its website. You can add it as a remote parcel repository in Cloudera Manager. Then you can download the library and activate it:

- In Cloudera Manager, navigate to .

- Select Configuration.

-

In the section, Remote Parcel Repository URLs, click the plus sign and add the following URL:

http://parcels.repos.intel.com/mkl/latest - Click Save Changes, and then you are returned to the page that lists available parcels.

-

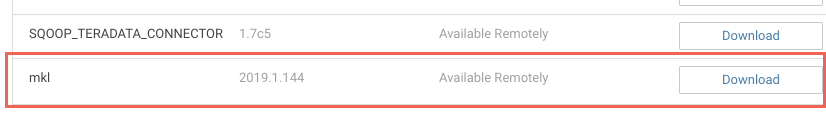

Click Download for the mkl parcel:

- Click Distribute, and when it finishes distributing to the hosts on your cluster, click Activate.

-

The MKL parcel is only composed of Linux shared library files (.so files), so to make it accessible to the JVM, a JNI wrapper has to be made. To make the wrapper, use the following MKL wrapper parcel. Use the same procedure described in Step 1 to add the following link to the Cloudera Manager parcel configuration page, download the parcel, distribute it among the hosts and then activate it:

https://raw.githubusercontent.com/Intel-bigdata/mkl-wrappers-parcel-repo/master/ - Restart the corresponding CDH services as guided by Cloudera Manager, and redeploy the client configuration if needed.

-

In Cloudera Manager, add the following configuration information into the Spark Client Advanced Configuration Snippet (Safety Valve) for spark-conf/spark-defaults.conf:

spark.driver.extraJavaOptions=-Dcom.github.fommil.netlib.BLAS=com.intel.mkl.MKLBLAS -Dcom.github.fommil.netlib.LAPACK=com.intel.mkl.MKLLAPACK spark.driver.extraClassPath=/opt/cloudera/parcels/mkl_wrapper_parcel/lib/java/mkl_wrapper.jar spark.driverEnv.MKL_VERBOSE=1 spark.executor.extraJavaOptions=-Dcom.github.fommil.netlib.BLAS=com.intel.mkl.MKLBLAS -Dcom.github.fommil.netlib.LAPACK=com.intel.mkl.MKLLAPACK spark.executor.extraClassPath=/opt/cloudera/parcels/mkl_wrapper_parcel/lib/java/mkl_wrapper.jar spark.executorEnv.MKL_VERBOSE=1This configuration information instructs the Spark application to load the MKL wrapper and use MKL as the default native library for Spark ML.

-

Open the Spark shell again to verify the native library, and you should see the following output:

scala> import com.github.fommil.netlib.BLAS import com.github.fommil.netlib.BLAS scala> println(BLAS.getInstance().getClass().getName()) com.intel.mkl.MKLBLAS

Performance Comparisons

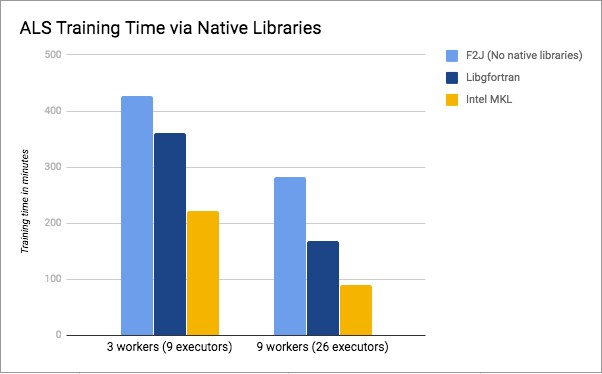

In this section, we use the ALS algorithm to compare the training speed with different underlying math libraries, including F2J, libgfortran, and Intel’s MKL.

The hardware we are using are the r4.large VM instances from Amazon EC2, with 2 CPU cores and 15.25 GB of memory for each instance. In addition, we are using CentOS 7.5 and CDH 5.15.2 with the Cloudera Distribution of Spark 2.3 Release 4. The training code is taken from the core part of the ALS chapter of Advanced Analytics with Spark (2nd Edition) by Sandy Ryza, et al, O’Reilly (2017). The training data set is the one published by Audioscrobbler, which can be downloaded at:

https://storage.googleapis.com/aas-data-sets/profiledata_06-May-2005.tar.gz

Usually the rank of the ALS model is set to a much larger value than the default of 10, so we use the value of 200 here to make sure that the result is closer to real world examples. Below is the code used to set the parameter values for our ALS model:

val model = new ALS().

setSeed(Random.nextLong()).

setImplicitPrefs(true).

setRank(200).

setRegParam(0.01).

setAlpha(1.0).

setMaxIter(20).

setUserCol("user").

setItemCol("artist").

setRatingCol("count").

setPredictionCol("prediction")

The following table and figure shows the training time when using different native libraries of Spark ML. The values are shown in minutes. We can see that both libgfortran and Intel's MKL do improve the performance of training speed, and MKL seems to outperform even more. From these experimental results, libgfortran improves by 18% to 68%, while MKL improves by 92% to 213%.

| # of Workers/Executors | F2J | libgfortran | Intel MKL |

|---|---|---|---|

| 3 workers (9 executors) | 426 | 360 | 222 |

| 9 workers (26 executors) | 282 | 168 | 90 |

Acknowledgement

Many thanks to Alex Bleakley and Ian Buss of Cloudera for their helpful advice and for reviewing this article!

About the Author

Zuling Kang is a Senior Solutions Architect at Cloudera, Inc., and holds a Ph.D. in Computer Science. Before joining Cloudera, he worked as an architect of big data systems at China Mobile Zhejiang Co., Ltd. Currently, he has published nine academic/technical papers, of which seven are indexed by the Science Citation Index (SCI)/Ei Compendex (formerly the Engineering Index). One of these papers, "Performance-Aware Cloud Resource Allocation via Fitness-Enabled Auction," is published in the "IEEE Transactions on Parallel and Distributed Systems." Zuling's current research and engineering interests include architectures for big data platforms, big data processing technologies, and machine learning.