Cloudera Security Bulletins

This topic lists the security bulletins that have been released to address vulnerabilities in Cloudera Enterprise and its components.

- Cloudera Enterprise

- Cloudera Data Science Workbench

- Apache Hadoop

- Apache HBase

- Apache Hive

- Hue

- Apache Impala

- Apache Kafka

- Cloudera Manager

- Cloudera Navigator

- Cloudera Navigator Key Trustee

- Apache Oozie

- Cloudera Search

- Apache Sentry

- Apache Spark

- Cloudera Distribution of Apache Spark 2

- Apache ZooKeeper

Cloudera Enterprise

This section lists security bulletins for vulnerabilities that potentially affect the entire Cloudera Enterprise product suite. Bulletins specific to a component, such as Cloudera Manager, Impala, or Spark, can be found in the sections that follow.

Potentially Sensitive Information in Cloudera Diagnostic Support Bundles

Cloudera Manager transmits certain diagnostic data (or "bundles") to Cloudera. These diagnostic bundles are used by the Cloudera support team to reproduce, debug, and address technical issues for customers.

Cloudera support discovered that potentially sensitive data may be included in diagnostic bundles and transmitted to Cloudera. This sensitive data cannot be used by Cloudera for any purpose.

Cloudera has modified Cloudera Manager so that known sensitive data is redacted from the bundles before transmission to Cloudera. Work is in progress in Cloudera CDH components to remove logging and output of known potentially sensitive properties and configurations.

See Cloudera Manager Release Notes, specifically, What's New in Cloudera Manager 5.9.0 for more information (scroll to Diagnostic Bundles). Also see Sensitive Data Redaction in the Cloudera Security Guide for more information about bundles and redaction.

Cloudera strives to establish and follow best practices for the protection of customer information. Cloudera continually reviews and improves security practices, infrastructure, and data-handling policies.

Products affected: Cloudera CDH and Enterprise Editions

Releases affected: All Cloudera CDH and Enterprise Edition releases lower than 5.9.0

Users affected: All users

Date/time of detection: June 20th, 2016

Severity (Low/Medium/High): Medium

Impact: Possible logging and transmission of sensitive data

CVE: CVE-2016-5724

Immediate action required: Upgrade to Cloudera CDH and Enterprise Editions 5.9

Addressed in release/refresh/patch: Cloudera CDH and Enterprise Editions 5.9 and higher

For updates about this issue, see the Cloudera Knowledge article, TSB 2016-166: Potentially Sensitive Information in Cloudera Diagnostic Support Bundles.
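As an illustration of the "lower than 5.9.0" rule above, the following sketch compares a release string against the first fixed release. It is not part of the bulletin: the version_lt helper and the installed value are hypothetical, and the comparison assumes GNU sort with the -V option and full three-part version numbers.

```bash
#!/bin/bash
# Hypothetical helper: succeeds (exit 0) when version $1 is strictly lower
# than version $2. Relies on the version sort (-V) of GNU sort.
version_lt() {
  [ "$1" != "$2" ] &&
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# Assumption: replace this value with the release you read from your cluster.
installed="5.8.3"

if version_lt "$installed" "5.9.0"; then
  echo "Release $installed is affected; upgrade to 5.9 or higher."
else
  echo "Release $installed already redacts known sensitive data from bundles."
fi
```

Version sort is used instead of a plain string comparison so that a release such as 5.10.0 is correctly treated as higher than 5.9.0.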

Apache Commons Collections Deserialization Vulnerability

Cloudera has learned of a potential security vulnerability in a third-party library called the Apache Commons Collections. This library is used in products distributed and supported by Cloudera (“Cloudera Products”), including core Apache Hadoop. The Apache Commons Collections library is also in widespread use beyond the Hadoop ecosystem. At this time, no specific attack vector for this vulnerability has been identified as present in Cloudera Products.

In an abundance of caution, we are currently in the process of incorporating a version of the Apache Commons Collections library with a fix into the Cloudera Products. In most cases, this will require coordination with the projects in the Apache community. One example of this is tracked by HADOOP-12577.

The Apache Commons Collections potential security vulnerability is titled “Arbitrary remote code execution with InvokerTransformer” and is tracked by COLLECTIONS-580. MITRE has not issued a CVE, but related CVE-2015-4852 has been filed for the vulnerability. CERT has issued Vulnerability Note #576313 for this issue.

Cloudera Products affected: Cloudera Manager, Cloudera Navigator, Cloudera Director, CDH

Releases affected: CDH 5.5.0, CDH 5.4.8 and lower, Cloudera Manager 5.5.0, Cloudera Manager 5.4.8 and lower, Cloudera Navigator 2.4.0, Cloudera Navigator 2.3.8 and lower, Director 1.5.1 and lower

Users affected: All

Date/time of detection: Nov 7, 2015

Severity (Low/Medium/High): High

Impact: This potential vulnerability might enable an attacker to run arbitrary code from a remote machine without requiring authentication.

Immediate action required: Upgrade to the latest suitable version containing this fix when it is available.

Addressed in release/refresh/patch: Beginning with CDH 5.5.1, 5.4.9, and 5.3.9, Cloudera Manager 5.5.1, 5.4.9, and 5.3.9, Cloudera Navigator 2.4.1, 2.3.9 and 2.2.9, and Director 1.5.2, the new Apache Commons Collections library version is included in all Cloudera products.

Heartbleed Vulnerability in OpenSSL

The Heartbleed vulnerability is a serious vulnerability in OpenSSL as described at http://heartbleed.com/ (OpenSSL TLS heartbeat read overrun, CVE-2014-0160). Cloudera products do not ship with OpenSSL, but some components use this library. Customers using OpenSSL with Cloudera products need to update their OpenSSL library to one that doesn’t contain the vulnerability.

Products affected:

- All versions of OpenSSL 1.0.1 prior to 1.0.1g

Components affected:

- Hadoop Pipes uses OpenSSL.

- If SSL encryption is enabled for Impala's RPC implementation (by setting --ssl_server_certificate). This applies to any of the three Impala daemon processes: impalad, catalogd, and statestored.

- If HTTPS is enabled for Impala’s debug web server pages (by setting --webserver_certificate_file). This applies to any of the three Impala daemon processes: impalad, catalogd, and statestored.

- If HTTPS is used with Hue.

- Cloudera Manager agents, with TLS turned on, use OpenSSL.

Users affected:

- All users of the above scenarios.

Severity: High (If using the scenarios above)

CVE: CVE-2014-0160

Immediate action required:

- Ensure your Linux distribution version does not have the vulnerability.
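A quick first check is to compare the upstream OpenSSL version string against the affected range (1.0.1 through 1.0.1f). The sketch below is an illustration only: the is_heartbleed_affected function is hypothetical, and the result is not conclusive, because Linux distributions commonly backport fixes without changing the upstream version string. Treat your distribution's security advisory as the authority.

```bash
#!/bin/bash
# Hypothetical helper: succeeds when the given upstream OpenSSL version
# is 1.0.1 or 1.0.1a through 1.0.1f, the range named in this bulletin.
is_heartbleed_affected() {
  case "$1" in
    1.0.1|1.0.1[abcdef]) return 0 ;;
    *) return 1 ;;
  esac
}

if command -v openssl >/dev/null 2>&1; then
  # "openssl version" prints the version as the second word of its output.
  ver="$(openssl version | awk '{print $2}')"
  ver="${ver%%-*}"   # drop a suffix such as "-fips"
  if is_heartbleed_affected "$ver"; then
    echo "OpenSSL $ver is in the affected upstream range; check your vendor advisory."
  else
    echo "OpenSSL $ver is outside the affected upstream range."
  fi
fi
```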

“POODLE” Vulnerability on SSL/TLS enabled ports

The POODLE (Padding Oracle On Downgraded Legacy Encryption) attack, announced by Bodo Möller, Thai Duong, and Krzysztof Kotowicz at Google, forces the use of the obsolete SSLv3 protocol and then exploits a cryptographic flaw in SSLv3. The result is that an attacker on the same network as the victim can potentially decrypt parts of an otherwise encrypted channel.

SSLv3 has been obsolete, and known to have vulnerabilities, for many years now, but its retirement has been slow because of backward-compatibility concerns. SSLv3 has in the meantime been replaced by TLSv1, TLSv1.1, and TLSv1.2. Under normal circumstances, the strongest protocol version that both sides support is negotiated at the start of the connection. However, an attacker can introduce errors into this negotiation and force a fallback to the weakest protocol version, SSLv3.

The only solution to the POODLE attack is to completely disable SSLv3. This requires changes across a wide variety of components of CDH, and in Cloudera Manager.

Products affected: Cloudera Manager and CDH.

Releases affected: All releases of Cloudera Manager and CDH earlier than the following:

- Cloudera Manager and CDH 5.2.1

- Cloudera Manager and CDH 5.1.4

- Cloudera Manager and CDH 5.0.5

- CDH 4.7.1

- Cloudera Manager 4.8.5

Users affected: All users

Date and time of detection: October 14th, 2014.

Severity (Low/Medium/High): Medium. NIST rates the severity at 4.3 out of 10.

Impact: Allows unauthorized disclosure of information; allows component impersonation.

CVE: CVE-2014-3566

Immediate action required:

- If you are running Cloudera Manager and CDH 5.2.0, upgrade to Cloudera Manager and CDH 5.2.1

- If you are running Cloudera Manager and CDH 5.1.0 through 5.1.3, upgrade to Cloudera Manager and CDH 5.1.4

- If you are running Cloudera Manager and CDH 5.0.0 through 5.0.4, upgrade to Cloudera Manager and CDH 5.0.5

- If you are running a CDH version earlier than 4.7.1, upgrade to CDH 4.7.1

- If you are running a Cloudera Manager version earlier than 4.8.5, upgrade to Cloudera Manager 4.8.5

Cloudera Data Science Workbench

This section lists the security bulletins that have been released for Cloudera Data Science Workbench.

- TSB-349: SQL Injection Vulnerability in Cloudera Data Science Workbench

- TSB-350: Risk of Data Loss During Cloudera Data Science Workbench (CDSW) Shutdown and Restart

- TSB-351: Unauthorized Project Access in Cloudera Data Science Workbench

- TSB-346: Risk of Data Loss During Cloudera Data Science Workbench (CDSW) Shutdown and Restart

- TSB-328: Unauthenticated User Enumeration in Cloudera Data Science Workbench

- TSB-313: Remote Command Execution and Information Disclosure in Cloudera Data Science Workbench

- TSB-248: Privilege Escalation and Database Exposure in Cloudera Data Science Workbench

TSB-349: SQL Injection Vulnerability in Cloudera Data Science Workbench

An SQL injection vulnerability was found in Cloudera Data Science Workbench. This would allow any authenticated user to run arbitrary queries against CDSW’s internal database. The database contains user contact information, bcrypt-hashed CDSW passwords (in the case of local authentication), API keys, and stored Kerberos keytabs.

Products affected: Cloudera Data Science Workbench (CDSW)

Releases affected: CDSW 1.4.0, 1.4.1, 1.4.2

Users affected: All

Date/time of detection: 2018-10-18

Detected by: Milan Magyar (Cloudera)

Severity (Low/Medium/High): Critical (9.9): CVSS:3.0/AV:N/AC:L/PR:L/UI:N/S:C/C:H/I:H/A:H

Impact: An authenticated CDSW user can arbitrarily access and modify the CDSW internal database. This allows privilege escalation in CDSW, Kubernetes, and the Linux host; creation, deletion, modification, and exfiltration of data, code, and credentials; denial of service; and data loss.

CVE: CVE-2018-20091

Immediate action required:

- Strongly consider performing a backup before beginning. We advise you to have a backup before performing any upgrade and before beginning this remediation work.

- Upgrade to Cloudera Data Science Workbench 1.4.3 (or higher).

- In an abundance of caution, Cloudera recommends that you revoke credentials and secrets stored by CDSW. To revoke these credentials:

  - Change the password for any account with a keytab or Kerberos credential that has been stored in CDSW. This includes the Kerberos principals for the associated CDH cluster if entered on the CDSW “Hadoop Authentication” user settings page.

  - With Cloudera Data Science Workbench 1.4.3 running, run the following remediation script on each CDSW node, including the master and all workers: Remediation Script for TSB-349

    Note: Cloudera Data Science Workbench will become unavailable during this time.

    The script performs the following actions:

    - If using local user authentication, logs out every user and resets their CDSW password.

    - Regenerates or deletes various keys for every user.

    - Resets secrets used for internal communications.

  - Fully stop and start Cloudera Data Science Workbench (a restart is not sufficient).

    - For CSD-based deployments, restart the CDSW service in Cloudera Manager.

      OR

    - For RPM-based deployments, run cdsw stop followed by cdsw start on the CDSW master node.

  - If using internal TLS termination: revoke and regenerate the CDSW TLS certificate and key.

  - For each user, revoke the previous CDSW-generated SSH public key for git integration on the git side (the private key in CDSW has already been deleted). A new SSH key pair has already been generated and should be installed in the old key’s place.

  - Revoke and regenerate any credential stored within a CDSW project, including any passwords stored in projects’ environment variables.

- Verify all CDSW settings to ensure they are unchanged (for example, SMTP server, authentication settings, custom Docker images, and host mounts).

- Treat all CDSW hosts as potentially compromised with root access. Remediate per your policy.

Addressed in release/refresh/patch: Cloudera Data Science Workbench 1.4.3

For the latest update on this issue see the corresponding Knowledge article:

TSB-350: Risk of Data Loss During Cloudera Data Science Workbench (CDSW) Shutdown and Restart

Stopping Cloudera Data Science Workbench involves unmounting the NFS volumes that store CDSW project directories and then cleaning up a folder where CDSW stores its temporary state. However, due to a race condition, this NFS unmount process can take too long or fail altogether. If this happens, any CDSW projects that remain mounted will be deleted.

TSB-2018-346 was released in the CDSW 1.4.2 time frame to fix this issue, but it turned out to be only a partial fix. With CDSW 1.4.3, we have fixed the issue permanently. However, the script that was provided with TSB-2018-346 still ensures that data loss is prevented and must be used to shut down or restart all the affected CDSW releases listed below. The same script is also available under the Immediate Action Required section below.

Products affected: Cloudera Data Science Workbench

Releases affected:

- 1.0.x

- 1.1.x

- 1.2.x

- 1.3.0, 1.3.1

- 1.4.0, 1.4.1, 1.4.2

Users affected: This potentially affects all CDSW users.

Detected by: Nehmé Tohmé (Cloudera)

Severity (Low/Medium/High): High

Impact: Potential data loss.

CVE: N/A

Immediate action required: If you are running any of the affected Cloudera Data Science Workbench versions, you must run the following script on the CDSW master node every time before you stop or restart Cloudera Data Science Workbench. Failure to do so can result in data loss.

This script should also be run before initiating a Cloudera Data Science Workbench upgrade. As always, we recommend creating a full backup prior to beginning an upgrade.

cdsw_protect_stop_restart.sh - Available for download at: cdsw_protect_stop_restart.sh.

#!/bin/bash

set -e

cat << EXPLANATION

This script is a workaround for Cloudera TSB-346 and TSB-350. It protects your
CDSW projects from a rare race condition that can result in data loss.
Run this script before stopping the CDSW service, irrespective of whether
the stop precedes a restart, upgrade, or any other task.

Run this script only on the master node of your CDSW cluster.

You will be asked to specify a target folder on the master node where the
script will save a backup of all your project files. Make sure the target
folder has enough free space to accommodate all of your project files. To
determine how much space is required, run 'du -hs /var/lib/cdsw/current/projects'
on the CDSW master node.

This script will first back up your project files to the specified target
folder. It will then temporarily move your project files aside to protect
against the data loss condition. At that point, it is safe to stop the CDSW
service. After CDSW has stopped, the script will move the project files back
into place.

Note: This workaround is not required for CDSW 1.4.3 and higher.

EXPLANATION

read -p "Enter target folder for backups: " backup_target

echo "Backing up to $backup_target..."
rsync -azp /var/lib/cdsw/current/projects "$backup_target"

read -n 1 -p "Backup complete. Press enter when you are ready to stop CDSW: "

echo "Deleting all Kubernetes resources..."
kubectl delete configmaps,deployments,daemonsets,replicasets,services,ingress,secrets,persistentvolumes,persistentvolumeclaims,jobs --all
kubectl delete pods --all

echo "Temporarily saving project files to /var/lib/cdsw/current/projects_tmp..."
mkdir /var/lib/cdsw/current/projects_tmp
mv /var/lib/cdsw/current/projects/* /var/lib/cdsw/current/projects_tmp

echo -e "Please stop the CDSW service."

read -n 1 -p "Press enter when CDSW has stopped: "

echo "Moving projects back into place..."
mv /var/lib/cdsw/current/projects_tmp/* /var/lib/cdsw/current/projects
rm -rf /var/lib/cdsw/current/projects_tmp

echo -e "Done. You may now upgrade or start the CDSW service."
echo -e "When CDSW is running, if desired, you may delete the backup data at $backup_target"

Addressed in release/refresh/patch: This issue is fixed in Cloudera Data Science Workbench 1.4.3.

Note that you are required to run the workaround script above when you upgrade from an affected version to a release with the fix. This helps guard against data loss when the affected version needs to be shut down during the upgrade process.
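The workaround script's own explanation asks you to confirm that the backup target has enough free space before you begin. A minimal sketch of that pre-check follows, assuming the POSIX du and df utilities. The has_room_for_backup function and the /backup/cdsw path are hypothetical and are not part of cdsw_protect_stop_restart.sh.

```bash
#!/bin/bash
# Hypothetical helper: succeeds when the filesystem that holds directory $2
# has at least as many free KiB as the directory tree $1 currently uses.
has_room_for_backup() {
  local src="$1" dst="$2" needed_kb free_kb
  needed_kb="$(du -sk "$src" | awk '{print $1}')"
  # With -P, df prints one header line and one data line; field 4 is "Available".
  free_kb="$(df -Pk "$dst" | awk 'NR==2 {print $4}')"
  [ -n "$needed_kb" ] && [ -n "$free_kb" ] && [ "$free_kb" -ge "$needed_kb" ]
}

projects=/var/lib/cdsw/current/projects
target=/backup/cdsw   # assumption: your chosen backup folder

if [ -d "$projects" ] && [ -d "$target" ]; then
  if has_room_for_backup "$projects" "$target"; then
    echo "Enough free space in $target for a copy of $projects."
  else
    echo "Not enough free space in $target; choose another backup folder."
  fi
fi
```

The check is a lower bound only: it does not account for data written to the target while the backup runs.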

For the latest update on this issue see the corresponding Knowledge article:

TSB 2019-350: Risk of Data Loss During Cloudera Data Science Workbench (CDSW) Shutdown and Restart

TSB-351: Unauthorized Project Access in Cloudera Data Science Workbench

Malicious CDSW users can bypass project permission checks and gain read-write access to any project folder in CDSW.

Products affected: Cloudera Data Science Workbench

Releases affected: Cloudera Data Science Workbench 1.4.0, 1.4.1, 1.4.2

Users affected: All CDSW Users

Date/time of detection: 10/29/2018

Detected by: Che-Yuan Liang (Cloudera)

Severity (Low/Medium/High): High (8.3: CVSS:3.0/AV:N/AC:L/PR:L/UI:N/S:U/C:H/I:H/A:L)

Impact: Project data can be read or written (changed, destroyed) by any Cloudera Data Science Workbench user.

CVE: CVE-2018-20090

Immediate action required:

Upgrade to a version of Cloudera Data Science Workbench with the fix (version 1.4.3, 1.5.0, or higher).

Addressed in release/refresh/patch: Cloudera Data Science Workbench 1.4.3 (and higher)

For the latest update on this issue see the corresponding Knowledge article:

TSB 2019-351: Unauthorized Project Access in Cloudera Data Science Workbench

TSB-346: Risk of Data Loss During Cloudera Data Science Workbench (CDSW) Shutdown and Restart

Stopping Cloudera Data Science Workbench involves unmounting the NFS volumes that store CDSW project directories and then cleaning up a folder where the kubelet stores its temporary state. However, due to a race condition, this NFS unmount process can take too long or fail altogether. If this happens, CDSW projects that remain mounted will be deleted by the cleanup step.

Products affected: Cloudera Data Science Workbench

Releases affected:

- 1.0.x

- 1.1.x

- 1.2.x

- 1.3.0, 1.3.1

- 1.4.0, 1.4.1

Users affected: This potentially affects all CDSW users.

Detected by: Nehmé Tohmé (Cloudera)

Severity (Low/Medium/High): High

Impact: If the NFS unmount fails during shutdown, data loss can occur. All CDSW project files might be deleted.

CVE: N/A

Immediate action required: If you are running any of the affected Cloudera Data Science Workbench versions, you must run the following script on the CDSW master node every time before you stop or restart Cloudera Data Science Workbench. Failure to do so can result in data loss.

This script should also be run before initiating a Cloudera Data Science Workbench upgrade. As always, we recommend creating a full backup prior to beginning an upgrade.

cdsw_protect_stop_restart.sh - Available for download at: cdsw_protect_stop_restart.sh.

#!/bin/bash

set -e

cat << EXPLANATION

This script is a workaround for Cloudera TSB-346. It protects your
CDSW projects from a rare race condition that can result in data loss.
Run this script before stopping the CDSW service, irrespective of whether
the stop precedes a restart, upgrade, or any other task.

Run this script only on the master node of your CDSW cluster.

You will be asked to specify a target folder on the master node where the
script will save a backup of all your project files. Make sure the target
folder has enough free space to accommodate all of your project files. To
determine how much space is required, run 'du -hs /var/lib/cdsw/current/projects'
on the CDSW master node.

This script will first back up your project files to the specified target
folder. It will then temporarily move your project files aside to protect
against the data loss condition. At that point, it is safe to stop the CDSW
service. After CDSW has stopped, the script will move the project files back
into place.

Note: This workaround is not required for CDSW 1.4.2 and higher.

EXPLANATION

read -p "Enter target folder for backups: " backup_target

echo "Backing up to $backup_target..."
rsync -azp /var/lib/cdsw/current/projects "$backup_target"

read -n 1 -p "Backup complete. Press enter when you are ready to stop CDSW: "

echo "Deleting all Kubernetes resources..."
kubectl delete configmaps,deployments,daemonsets,replicasets,services,ingress,secrets,persistentvolumes,persistentvolumeclaims,jobs --all
kubectl delete pods --all

echo "Temporarily saving project files to /var/lib/cdsw/current/projects_tmp..."
mkdir /var/lib/cdsw/current/projects_tmp
mv /var/lib/cdsw/current/projects/* /var/lib/cdsw/current/projects_tmp

echo -e "Please stop the CDSW service."

read -n 1 -p "Press enter when CDSW has stopped: "

echo "Moving projects back into place..."
mv /var/lib/cdsw/current/projects_tmp/* /var/lib/cdsw/current/projects
rm -rf /var/lib/cdsw/current/projects_tmp

echo -e "Done. You may now upgrade or start the CDSW service."
echo -e "When CDSW is running, if desired, you may delete the backup data at $backup_target"

Addressed in release/refresh/patch: This issue is fixed in Cloudera Data Science Workbench 1.4.2.

Note that you are required to run the workaround script above when you upgrade from an affected version to a release with the fix. This helps guard against data loss when the affected version needs to be shut down during the upgrade process.

For the latest update on this issue see the corresponding Knowledge article:

TSB 2018-346: Risk of Data Loss During Cloudera Data Science Workbench (CDSW) Shutdown and Restart

TSB-328: Unauthenticated User Enumeration in Cloudera Data Science Workbench

Unauthenticated users can get a list of user accounts of Cloudera Data Science Workbench.

Products affected: Cloudera Data Science Workbench

Releases affected: Cloudera Data Science Workbench 1.4.0 (and lower)

Users affected: All users of Cloudera Data Science Workbench 1.4.0 (and lower)

Date/time of detection: June 11, 2018

Severity (Low/Medium/High): 5.3 (Medium) CVSS:3.0/AV:N/AC:L/PR:N/UI:N/S:U/C:L/I:N/A:N

Impact: Unauthenticated user enumeration in Cloudera Data Science Workbench.

CVE: CVE-2018-15665

Immediate action required: Upgrade to the latest version of Cloudera Data Science Workbench (1.4.2 or higher).

Note that Cloudera Data Science Workbench 1.4.1 is no longer publicly available due to TSB 2018-346: Risk of Data Loss During Cloudera Data Science Workbench (CDSW) Shutdown and Restart.

Addressed in release/refresh/patch: Cloudera Data Science Workbench 1.4.2 (and higher)

For the latest update on this issue see the corresponding Knowledge article:

TSB 2018-318: Unauthenticated User Enumeration in Cloudera Data Science Workbench

TSB-313: Remote Command Execution and Information Disclosure in Cloudera Data Science Workbench

A configuration issue in Kubernetes used by Cloudera Data Science Workbench can allow remote command execution and privilege escalation in CDSW. A separate information permissions issue can cause the LDAP bind password to be exposed to authenticated CDSW users when LDAP bind search is enabled.

Products affected: Cloudera Data Science Workbench

Releases affected: Cloudera Data Science Workbench 1.3.0 (and lower)

Users affected: All users of Cloudera Data Science Workbench 1.3.0 (and lower)

Date/time of detection: May 16, 2018

Severity (Low/Medium/High): High

Impact: Remote command execution and information disclosure

CVE: CVE-2018-11215

Immediate action required: Upgrade to the latest version of Cloudera Data Science Workbench (1.3.1 or higher) and change the LDAP bind password if previously configured in Cloudera Data Science Workbench.

Addressed in release/refresh/patch: Cloudera Data Science Workbench 1.3.1 (and higher)

For the latest update on this issue see the corresponding Knowledge Base article:

TSB-248: Privilege Escalation and Database Exposure in Cloudera Data Science Workbench

Several web application vulnerabilities allow malicious authenticated Cloudera Data Science Workbench (CDSW) users to escalate privileges in CDSW. In combination, such users can exploit these vulnerabilities to gain root access to CDSW nodes, gain access to the CDSW database, which includes Kerberos keytabs of CDSW users and bcrypt-hashed passwords, and obtain other privileged information such as session tokens, invitation tokens, and environment variables.

Products affected: Cloudera Data Science Workbench

Releases affected: Cloudera Data Science Workbench 1.0.0, 1.0.1, 1.1.0, 1.1.1

Users affected: All users of Cloudera Data Science Workbench 1.0.0, 1.0.1, 1.1.0, 1.1.1

Date/time of detection: September 1, 2017

Detected by: NCC Group

Severity (Low/Medium/High): High

Impact: Privilege escalation and database exposure.

CVE: CVE-2017-15536

Immediate action required: Upgrade to the latest version of Cloudera Data Science Workbench.

Addressed in release/refresh/patch: Cloudera Data Science Workbench 1.2.0 or higher.

Apache Hadoop

This section lists the security bulletins that have been released for Apache Hadoop.

- XSS Cloudera Manager

- CVE-2018-1296 Permissive Apache Hadoop HDFS listXAttr Authorization Exposes Extended Attribute Key/Value Pairs

- Hadoop YARN Privilege Escalation

- Zip Slip Vulnerability

- Apache Hadoop MapReduce Job History Server (JHS) vulnerability

- No security exposure due to CVE-2017-3162 for Cloudera Hadoop clusters

- Cross-site scripting exposure (CVE-2017-3161) not an issue for Cloudera Hadoop

- Apache YARN NodeManager Password Exposure

- Short-Circuit Read Vulnerability

- Apache Hadoop Privilege Escalation Vulnerability

- Encrypted MapReduce spill data on the local file system is vulnerable to unauthorized disclosure

- Critical Security Related Files in YARN NodeManager Configuration Directories Accessible to Any User

- Apache Hadoop Distributed Cache Vulnerability

- Some DataNode Admin Commands Do Not Check If Caller Is An HDFS Admin

- JobHistory Server Does Not Enforce ACLs When Web Authentication is Enabled

- Apache Hadoop and Apache HBase "Man-in-the-Middle" Vulnerability

- DataNode Client Authentication Disabled After NameNode Restart or HA Enable

- Several Authentication Token Types Use Secret Key of Insufficient Length

- MapReduce with Security

XSS Cloudera Manager

Malicious Impala queries can result in Cross Site Scripting (XSS) when viewed in Cloudera Manager.

Products affected: Impala

Releases affected:

- Cloudera Manager 5.13.x, 5.14.x, 5.15.1, 5.15.2, 5.16.1

- Cloudera Manager 6.0.0, 6.0.1, 6.1.0

Users affected: All Cloudera Manager Users

Date/time of detection: November 2018

Severity: High

Impact: When a malicious user generates a piece of JavaScript in the impala-shell and then goes to the Queries tab of the Impala service in Cloudera Manager, that piece of JavaScript code gets evaluated, resulting in an XSS.

CVE: CVE-2019-14449

Upgrade: Update to a version of CDH containing the fix.

Workaround: There is no workaround. Upgrade to the latest available maintenance release.

Addressed in release/refresh/patch:

- Cloudera Manager 5.16.2

- Cloudera Manager 6.0.2, 6.1.1, 6.2.0, 6.3.0

For the latest update on this issue see the corresponding Knowledge article:

CVE-2018-1296 Permissive Apache Hadoop HDFS listXAttr Authorization Exposes Extended Attribute Key/Value Pairs

HDFS exposes extended attribute key/value pairs during listXAttrs, verifying only path-level search access to the directory rather than path-level read permission to the referent.

Products affected: HDFS

Releases affected:

- CDH 5.4.1-5.15.1, 5.16.0

- CDH 6.0.0, 6.0.1, 6.1.0

Users affected: Users who store sensitive data in extended attributes, such as users of HDFS encryption.

Detected by: Rushabh Shah, Yahoo! Inc., Hadoop committer

Date/time of detection: December 12, 2017

Severity: Medium

Impact: HDFS exposes extended attribute key/value pairs during listXAttrs, verifying only path-level search access to the directory rather than path-level read permission to the referent. This affects features that store sensitive data in extended attributes.

CVE: CVE-2018-1296

Upgrade: Update to a version of CDH containing the fix.

Workaround: If a file contains sensitive data in extended attributes, users and admins need to change the permission to prevent others from listing the directory that contains the file.
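As a sketch of the workaround above, the helper below only prints an hdfs dfs -chmod command that removes read and search (execute) permission for "other" users on a directory, so that you can review it before running it. The restrict_listing_cmd function and the /data/secure path are hypothetical; whether removing access for "other" is sufficient depends on the owner and group that you have assigned to the directory.

```bash
#!/bin/bash
# Hypothetical helper: print (do not run) the command that stops users
# outside the owner and group from listing or traversing an HDFS directory.
restrict_listing_cmd() {
  local dir="$1"
  # %q quotes the path so that the printed command is safe to paste into a shell.
  printf 'hdfs dfs -chmod o-rx %q\n' "$dir"
}

# Assumption: /data/secure is the directory that contains the sensitive file.
restrict_listing_cmd /data/secure
```

Run the printed command as the directory owner or as an HDFS superuser, then confirm the result with hdfs dfs -ls -d on the same path.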

Addressed in release/refresh/patch:

- CDH 5.16.2, 5.16.1

- CDH 6.0.1, CDH 6.1.0 and higher

For the latest update on this issue see the corresponding Knowledge article:

Hadoop YARN Privilege Escalation

A vulnerability in Hadoop YARN allows a user who can escalate to the yarn user to possibly run arbitrary commands as the root user.

Products affected: Hadoop YARN

Releases affected:

- All releases prior to CDH 5.16.0

- CDH 5.16.0 and CDH 5.16.1

- CDH 6.0.0

Users affected: Users running the Hadoop YARN service.

Detected by: Cloudera

Date/time of detection: 05/07/2018

Severity: High

Impact: The vulnerability allows a user who has access to a node in the cluster running a YARN NodeManager and who can escalate to the yarn user to run arbitrary commands as the root user even if the user is not allowed to escalate directly to the root user.

CVE: CVE-2018-8029

Upgrade: Upgrade to a release where the issue is fixed.

Workaround: The vulnerability can be mitigated by restricting access to the nodes where the YARN NodeManagers are deployed, and by ensuring that only the yarn user is a member of the yarn group and can sudo to yarn. Please consult with your internal system administration team and adhere to your internal security policy when evaluating the feasibility of the above mitigation steps.
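One part of this mitigation, confirming that only the yarn user is listed in the yarn group, can be checked on each NodeManager host. The following is a minimal sketch: the unexpected_group_members function is hypothetical, it inspects only the member list of the group entry, and it does not detect users whose primary group is yarn or sudo rules that allow switching to yarn.

```bash
#!/bin/bash
# Hypothetical helper: print every member of a group entry other than the
# expected user, one per line.
#   $1: a line in /etc/group format, such as the output of "getent group yarn"
#   $2: the only user name that should be listed
unexpected_group_members() {
  local entry="$1" allowed="$2" members
  members="${entry##*:}"   # last field: comma-separated member list
  # Drop the allowed user and any empty line left by an empty member list.
  printf '%s\n' "$members" | tr ',' '\n' | grep -v -x -e "$allowed" -e ''
}

if entry="$(getent group yarn 2>/dev/null)"; then
  extra="$(unexpected_group_members "$entry" yarn)"
  if [ -n "$extra" ]; then
    echo "Unexpected members of the yarn group:"
    echo "$extra"
  else
    echo "No unexpected members are listed in the yarn group."
  fi
fi
```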

Addressed in release/refresh/patch:

- CDH 5.16.2

- CDH 6.0.1, CDH 6.1.0 and higher

For the latest update on this issue see the corresponding Knowledge article:

TSB 2019-318: CVE-2018-8029 Hadoop YARN privilege escalation

Zip Slip Vulnerability

“Zip Slip” is a widespread, critical arbitrary file overwrite vulnerability that typically results in remote command execution. It was discovered and responsibly disclosed by the Snyk Security team ahead of a public disclosure on June 5, 2018, and affects thousands of projects.

Cloudera has analyzed our use of zip-related software, and has determined that only Apache Hadoop is vulnerable to this class of vulnerability in CDH 5. This has been fixed in upstream Hadoop as CVE-2018-8009.

Products affected: Hadoop

Releases affected:

- CDH 5.12.x and all prior releases

- CDH 5.13.0, 5.13.1, 5.13.2, 5.13.3

- CDH 5.14.0, 5.14.2, 5.14.3

- CDH 5.15.0

Users affected: All

Date of detection: April 19, 2018

Detected by: Snyk

Severity: High

Impact: Zip Slip is a form of directory traversal that can be exploited by extracting files from an archive. The premise of the directory traversal vulnerability is that an attacker can gain access to parts of the file system outside of the target folder in which they should reside. The attacker can then overwrite executable files and either invoke them remotely or wait for the system or user to call them, thus achieving remote command execution on the victim’s machine. The vulnerability can also cause damage by overwriting configuration files or other sensitive resources, and can be exploited on both client (user) machines and servers.

CVE: CVE-2018-8009

Immediate action required: Upgrade to a version that contains the fix.

Addressed in release/refresh/patch: CDH 5.14.4

For the latest update on this issue see the corresponding Knowledge article:

TSB 2018-307: Zip Slip Vulnerability
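The directory traversal described in the Impact statement comes from archive entry names that are absolute or that contain ".." path components. The sketch below illustrates the general class of check that guards against it. It is not the fix applied in Hadoop: the is_safe_entry function and the sample entry names are hypothetical, and the check is deliberately conservative and does not cover symbolic links.

```bash
#!/bin/bash
# Hypothetical helper: succeeds when an archive entry name cannot escape the
# extraction directory, that is, it is not absolute and has no ".." component.
is_safe_entry() {
  case "$1" in
    /*|..|../*|*/..|*/../*) return 1 ;;
    *) return 0 ;;
  esac
}

# Assumption: sample entry names as an archive listing might report them.
for name in "conf/core-site.xml" "../../etc/cron.d/evil" "/etc/passwd"; do
  if is_safe_entry "$name"; then
    echo "ok:      $name"
  else
    echo "refused: $name"
  fi
done
```

Rejecting any ".." component, even one that would resolve inside the target folder, keeps the rule simple at the cost of refusing some harmless archives.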

Apache Hadoop MapReduce Job History Server (JHS) vulnerability

A vulnerability in Hadoop’s Job History Server allows a cluster user to expose private files owned by the user running the MapReduce Job History Server (JHS) process. See http://seclists.org/oss-sec/2018/q1/79 for reference.

Products affected: Apache Hadoop MapReduce

Releases affected: All releases prior to CDH 5.12.0, and CDH 5.12.0, CDH 5.12.1, CDH 5.12.2, CDH 5.13.0, CDH 5.13.1, CDH 5.14.0

Users affected: Users running the MapReduce Job History Server (JHS) daemon

Date/time of detection: November 8, 2017

Detected by: Man Yue Mo of lgtm.com

Severity (Low/Medium/High): High

Impact: The vulnerability allows a cluster user to expose private files owned by the user running the MapReduce Job History Server (JHS) process. The malicious user can construct a configuration file containing XML directives that reference sensitive files on the MapReduce Job History Server (JHS) host.

CVE: CVE-2017-15713

Immediate action required: Upgrade to a release where the issue is fixed.

Addressed in release/refresh/patch: CDH 5.13.2

No security exposure due to CVE-2017-3162 for Cloudera Hadoop clusters

Information only. No action required. In the spirit of being overly cautious, CVE-2017-3162 was filed by the Apache Hadoop community to document the ability of the HDFS client (in the CDH 5.x code base) to browse the HDFS namespace without validating the NameNode as a query parameter.

This benign exposure was discovered independently by Cloudera (as well as other members of the Hadoop community) during routine static source code analysis. It is considered benign because there are no known attack vectors for it.

Products affected: N/A

Releases affected: CDH 5.x and prior.

Users affected: None

Severity (Low/Medium/High): None

Impact: No impact to Cloudera customers or others running Hadoop clusters.

CVE: CVE-2017-3162

Immediate action required: No action required.

Addressed in release/refresh/patch: Not applicable.

Cross-site scripting exposure (CVE-2017-3161) not an issue for Cloudera Hadoop

Information only: No action required. A vulnerability recently uncovered by the wider security community had already been caught and resolved by Cloudera.

Products affected: Hadoop

Releases affected: CDH releases prior to 5.2.6. Specifically, the HDFS web UI in those releases would have been exposed to this vulnerability.

Users affected: None

Severity (Low/Medium/High): N/A

Impact: No impact to Cloudera customers or others running Hadoop clusters.

CVE: CVE-2017-3161

Immediate action required: No action required.

Addressed in release/refresh/patch:

- CDH5.2.6

- CDH5.3.4, CDH5.3.5, CDH5.3.6, CDH5.3.8, CDH5.3.9, CDH5.3.10

- CDH5.4.3, CDH5.4.4, CDH5.4.5, CDH5.4.7, CDH5.4.8, CDH5.4.9, CDH5.4.10, CDH5.4.11

- CDH5.5.0 and all higher releases

Apache YARN NodeManager Password Exposure

The YARN NodeManager in Apache Hadoop may leak the password for its credential store. This credential store is created by Cloudera Manager and contains sensitive information used by the NodeManager. Any container launched by that NodeManager can gain access to the password that protects the credential store.

Examples of sensitive information inside the credential store include a keystore password and an LDAP bind user password.

The credential store is also protected by Unix file permissions. When managed by Cloudera Manager, the credential store is readable only by the yarn user and the hadoop group. As a result, the scope of this leak is mitigated, making this a Low severity issue.

Products affected: YARN

Releases affected:

- CDH 5.4.0, 5.4.1, 5.4.2, 5.4.3, 5.4.4, 5.4.5, 5.4.7, 5.4.8, 5.4.9, 5.4.10

- CDH 5.5.0, 5.5.1, 5.5.2, 5.5.3, 5.5.4

- CDH 5.6.0, 5.6.1

- CDH 5.7.0, 5.7.1, 5.7.2

- CDH 5.8.0, 5.8.1

Users affected: Cloudera Manager users who configure YARN to connect to external services (such as LDAP) that require a password, or who have enabled TLS for YARN.

Date/time of detection: March 15, 2016

Detected by: Robert Kanter

Severity (Low/Medium/High): Low (The credential store itself has restrictive permissions.)

Impact: Potential sensitive data exposure

CVE: CVE-2016-3086

Immediate action required: Upgrade to a release in which this issue has been addressed.

Addressed in release/refresh/patch: CDH 5.4.11, CDH 5.5.5, CDH 5.6.2, CDH 5.7.3, CDH 5.8.2

Short-Circuit Read Vulnerability

In HDFS short-circuit reads, a local user on an HDFS DataNode may be able to create a block token that grants unauthorized read access to arbitrary files by guessing certain fields in the token.

Products affected: HDFS

Releases affected:

- CDH 5.0.0, 5.0.1, 5.0.2, 5.0.3, 5.0.4, 5.0.5, 5.0.6

- CDH 5.1.0, 5.1.2, 5.1.3, 5.1.4, 5.1.5

- CDH 5.2.0, 5.2.1, 5.2.3, 5.2.4, 5.2.5, 5.2.6

- CDH 5.3.0, 5.3.2, 5.3.3, 5.3.4, 5.3.5, 5.3.6, 5.3.8, 5.3.9, 5.3.10

- CDH 5.4.0, 5.4.1, 5.4.3, 5.4.4, 5.4.5, 5.4.7, 5.4.8, 5.4.9, 5.4.10, 5.4.11

- CDH 5.5.0, 5.5.1, 5.5.2, 5.5.4, 5.5.5, 5.5.6

- CDH 5.6.0, 5.6.1

Users affected: All HDFS users

Detected by: Kihwal Lee of Yahoo Inc.

Severity (Low/Medium/High): Medium

Impact: A local user may be able to gain unauthorized read access to block data.

CVE: CVE-2016-5001

Immediate action required: Upgrade to a fixed version.

Addressed in release/refresh/patch: 5.7.0 and higher, 5.8.0 and higher, 5.9.0 and higher.

For the latest update on this issue see the corresponding Knowledge article:

Apache Hadoop Privilege Escalation Vulnerability

A remote user who can authenticate with the HDFS NameNode can possibly run arbitrary commands as the hdfs user.

See CVE-2016-5393 Apache Hadoop Privilege escalation vulnerability

Products affected: HDFS and YARN

Releases affected:

- CDH 5.0.0, 5.0.1, 5.0.2, 5.0.3, 5.0.4, 5.0.5, 5.0.6

- CDH 5.1.0, 5.1.2, 5.1.3, 5.1.4, 5.1.5

- CDH 5.2.0, 5.2.1, 5.2.3, 5.2.4, 5.2.5, 5.2.6

- CDH 5.3.0, 5.3.2, 5.3.3, 5.3.4, 5.3.5, 5.3.6, 5.3.8, 5.3.9, 5.3.10

- CDH 5.4.0, 5.4.1, 5.4.3, 5.4.4, 5.4.5, 5.4.7, 5.4.8, 5.4.9, 5.4.10

- CDH 5.5.0, 5.5.1, 5.5.2, 5.5.4

- CDH 5.6.0, 5.6.1

- CDH 5.7.0, 5.7.1, 5.7.2

- CDH 5.8.0

Users affected: All

Date/time of detection: July 26th, 2016

Severity (Low/Medium/High): High

Impact: A remote user who can authenticate with the HDFS NameNode can possibly run arbitrary commands with the same privileges as the HDFS service.

This vulnerability is critical because it is easy to exploit and bypasses all Hadoop security: a remote user can potentially run any command as the hdfs user. There is no mitigation for this vulnerability.

CVE: CVE-2016-5393

Immediate action required: Upgrade immediately.

Addressed in release/refresh/patch: CDH 5.4.11, CDH 5.5.5, CDH 5.7.3, CDH 5.8.2, CDH 5.9.0 and higher.

Encrypted MapReduce spill data on the local file system is vulnerable to unauthorized disclosure

MapReduce spills intermediate data to the local disk. The encryption key used to encrypt this spill data is stored in clear text on the local filesystem along with the encrypted data itself. A malicious user with access to the file with these credentials can load the tokens from the file, read the key, and then decrypt the spill data.

See the upstream announcement on the Mitre site.

Products affected: MapReduce

Releases affected:

- CDH 5.2.0, CDH 5.2.1, CDH 5.2.3, CDH 5.2.4, CDH 5.2.5, CDH 5.2.6

- CDH 5.3.0, CDH 5.3.2, CDH 5.3.3, CDH 5.3.4, CDH 5.3.5, CDH 5.3.6, CDH 5.3.8, CDH 5.3.9

- CDH 5.4.0, CDH 5.4.1, CDH 5.4.3, CDH 5.4.4, CDH 5.4.5, CDH 5.4.7, CDH 5.4.8, CDH 5.4.9

- CDH 5.5.0, CDH 5.5.1, CDH 5.5.2

Users affected: Users who have enabled encryption of MapReduce intermediate/spilled data to the local filesystem

Severity (Low/Medium/High): High

CVE: CVE-2015-1776

Addressed in release/refresh/patch: CDH 5.3.10, CDH 5.4.10, CDH 5.5.4; CDH 5.6.0 and higher

Immediate action required: Upgrade to one of the above releases if you use spill data encryption. With this security fix, MapReduce ApplicationMaster failures are no longer tolerated when spill data is encrypted. After the upgrade, individual MapReduce jobs might fail if the ApplicationMaster goes down.

Critical Security Related Files in YARN NodeManager Configuration Directories Accessible to Any User

When Cloudera Manager starts a YARN NodeManager, it makes all files in its configuration directory (typically /var/run/cloudera-scm-agent/process) readable by all users. This includes the file containing the Kerberos keytabs (yarn.keytab) and the file containing passwords for the SSL keystore (ssl-server.xml).

Global read permissions must be removed on the NodeManager’s security-related files.
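
To see whether a host is exposed, the permission bits of the files in the NodeManager configuration directory can be inspected. The following Python sketch is one possible way to do that and is not a Cloudera tool: the function name world_readable_files is hypothetical, and the directory to scan is whatever process directory applies on the host (the bulletin names /var/run/cloudera-scm-agent/process as typical).

```python
import os
import stat


def world_readable_files(directory: str) -> list:
    """Return regular files under directory that have the 'other' read bit set.

    Hypothetical helper; walks the tree and checks POSIX permission bits.
    """
    found = []
    for root, _dirs, files in os.walk(directory):
        for name in files:
            path = os.path.join(root, name)
            mode = os.lstat(path).st_mode
            if stat.S_ISREG(mode) and mode & stat.S_IROTH:
                found.append(path)
    return sorted(found)


if __name__ == "__main__":
    for path in world_readable_files("/var/run/cloudera-scm-agent/process"):
        print(path)
```

Any yarn.keytab or ssl-server.xml file that appears in the output is readable by every local user.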

Products affected: Cloudera Manager

Releases affected: All releases of Cloudera Manager 4.0 and higher.

Users affected: Customers who are using YARN in environments where Kerberos or SSL is enabled.

Date/time of detection: March 8, 2015

Severity (Low/Medium/High): High

Impact: Any user who can log in to a host where the YARN NodeManager is running can get access to the keytab file, use it to authenticate to the cluster, and perform unauthorized operations. If SSL is enabled, the user can also decrypt data transmitted over the network.

CVE: CVE-2015-2263

Immediate action required:

- If you are running YARN with Kerberos/SSL with Cloudera Manager 5.x, upgrade to the maintenance release with the security fix. If you are running YARN with Kerberos with Cloudera Manager 4.x, upgrade to any Cloudera Manager 5.x release with the security fix.

- Delete all “yarn” and “HTTP” principals from KDC/Active Directory. After deleting them, regenerate them using Cloudera Manager.

- Regenerate SSL keystores that you are using with the YARN service, using a new password.

ETA for resolution: Patches are available immediately with the release of this TSB.

Addressed in release/refresh/patch: Cloudera Manager releases 5.0.6, 5.1.5, 5.2.5, 5.3.3, and 5.4.0 have the fix for this bug.

For further updates on this issue see the corresponding Knowledge article:

Apache Hadoop Distributed Cache Vulnerability

The Distributed Cache Vulnerability allows a malicious cluster user to expose private files owned by the user running the YARN NodeManager process. The malicious user can create a public tar archive containing a symbolic link to a local file on the host running the YARN NodeManager process.
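
The kind of archive described above can be recognized by looking at the link members it contains. The following Python sketch is illustrative only and is not how the fix is implemented in Hadoop: the function name suspicious_links is hypothetical, and treating absolute targets and targets containing ".." as suspicious is an assumption made for the example.

```python
import tarfile


def suspicious_links(archive: tarfile.TarFile) -> list:
    """Return names of link members whose target is absolute or uses '..'.

    Hypothetical helper; inspects metadata only and extracts nothing.
    """
    flagged = []
    for member in archive.getmembers():
        if member.issym() or member.islnk():
            target = member.linkname
            if target.startswith("/") or ".." in target.split("/"):
                flagged.append(member.name)
    return flagged
```

Calling suspicious_links on an opened archive before it is localized gives a list of members that point outside the archive's own directory tree.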

Products affected: YARN in CDH 5.

- Cloudera Manager and CDH 5.2.1

- Cloudera Manager and CDH 5.1.4

- Cloudera Manager and CDH 5.0.5

Users affected: Users running the YARN NodeManager daemon with Kerberos authentication.

Severity (Low/Medium/High): High

Impact: Allows unauthorized disclosure of information.

CVE: CVE-2014-3627

Immediate action required:

- If you are running Cloudera Manager and CDH 5.2.0, upgrade to Cloudera Manager and CDH 5.2.1

- If you are running Cloudera Manager and CDH 5.1.0 through 5.1.3, upgrade to Cloudera Manager and CDH 5.1.4

- If you are running Cloudera Manager and CDH 5.0.0 through 5.0.4, upgrade to Cloudera Manager and CDH 5.0.5

Some DataNode Admin Commands Do Not Check If Caller Is An HDFS Admin

Three HDFS admin commands (refreshNamenodes, deleteBlockPool, and shutdownDatanode) lack proper privilege checks in Apache Hadoop 0.23.x prior to 0.23.11 and 2.x prior to 2.4.1, allowing arbitrary users to make DataNodes unnecessarily refresh their federated NameNode configs, delete inactive block pools, or shut down. The shutdownDatanode command was first introduced in 2.4.0, and refreshNamenodes and deleteBlockPool were added in 0.23.0. The deleteBlockPool command does not actually remove any underlying data from affected DataNodes, so this vulnerability cannot cause data loss, although cluster operations can be severely disrupted.

Products affected: Hadoop HDFS

Releases affected: CDH 5.0.0 and CDH 5.0.1

Users affected: All users running an HDFS cluster configured with Kerberos security

Date/time of detection: April 30, 2014

Impact: Through HDFS admin command-line tools, non-admin users can shut down DataNodes or force them to perform unnecessary operations.

CVE: CVE-2014-0229

Immediate action required: Upgrade to CDH 5.0.2 or higher.

JobHistory Server Does Not Enforce ACLs When Web Authentication is Enabled

The JobHistory Server does not enforce job ACLs when web authentication is enabled. This means that any user can see details of all jobs. This only affects users who are using MRv2/YARN with HTTP authentication enabled.

Products affected: Hadoop

Releases affected:

- All versions of CDH 4.5.x up to 4.5.0

- All versions of CDH 4.4.x up to 4.4.0

- All versions of CDH 4.3.x up to 4.3.1

- All versions of CDH 4.2.x up to 4.2.2

- All versions of CDH 4.1.x up to 4.1.5

- All versions of CDH 4.0.x

- CDH 5.0.0 Beta 1

Users affected: Users of YARN who have web authentication enabled.

Date/time of detection: October 14, 2013

Impact: Low

CVE: CVE-2013-6446

Immediate action required:

- None, if you are not using MRv2/YARN with HTTP authentication.

- If you are using MRv2/YARN with HTTP authentication, upgrade to CDH 4.6.0 or CDH 5.0.0 Beta 2 or contact Cloudera for a patch.

ETA for resolution: Fixed in CDH 5.0.0 Beta 2 released on 2/10/2014 and CDH 4.6.0 released on 2/27/2014.

Addressed in release/refresh/patch: CDH 4.6.0 and CDH 5.0.0 Beta 2.

Verification:

This vulnerability affects the JobHistory Server Web Services; it does not affect the JobHistory Server Web UI.

- Create two non-admin users: 'A' and 'B'

- Submit a MapReduce job as user 'A'. For example:

$ hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.0.0-SNAPSHOT.jar pi 2 2

- From the output of the above submission, copy the job ID, for example: job_1389847214537_0001

- With a browser logged in to the JobHistory Server Web UI as user 'B', access the following URL:

http://<JHS_HOST>:19888/ws/v1/history/mapreduce/jobs/job_1389847214537_0001

If the vulnerability has been fixed, you should get an HTTP UNAUTHORIZED response. If it has not been fixed, you should get XML output with basic information about the job.
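
The same check can be scripted. The following Python sketch is a rough outline rather than a supported verification tool: the function names and the headers parameter are hypothetical, and it assumes you can supply whatever credentials authenticate the request as user 'B' (the sketch does not perform SPNEGO or any other login itself). Without valid credentials for 'B', an UNAUTHORIZED response says nothing about whether the fix is present.

```python
import urllib.error
import urllib.request


def classify_status(status: int) -> str:
    """Map the HTTP status of the job request to a verification result."""
    if status == 401:  # HTTP UNAUTHORIZED: job ACLs are enforced
        return "fixed"
    if status == 200:  # job details returned to a user who should not see them
        return "vulnerable"
    return "inconclusive"


def check_job_acl(jhs_host: str, job_id: str, headers=None) -> str:
    """Request another user's job from the JobHistory Server web services.

    headers is a hypothetical hook for the credentials of user 'B'.
    """
    url = f"http://{jhs_host}:19888/ws/v1/history/mapreduce/jobs/{job_id}"
    request = urllib.request.Request(url, headers=headers or {})
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return classify_status(response.status)
    except urllib.error.HTTPError as err:
        return classify_status(err.code)
```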

Apache Hadoop and Apache HBase "Man-in-the-Middle" Vulnerability

The Apache Hadoop and HBase RPC protocols are intended to provide bi-directional authentication between clients and servers. However, a malicious server or network attacker can unilaterally disable these authentication checks. This allows for potential reduction in the configured quality of protection of the RPC traffic, and privilege escalation if authentication credentials are passed over RPC.

Products affected:

- Hadoop

- HBase

Releases affected:

- All versions of CDH 4.3.x prior to 4.3.1

- All versions of CDH 4.2.x prior to 4.2.2

- All versions of CDH 4.1.x prior to 4.1.5

- All versions of CDH 4.0.x

Users affected:

- Users of HDFS who have enabled Hadoop Kerberos security features and HDFS data encryption features.

- Users of MapReduce or YARN who have enabled Hadoop Kerberos security features.

- Users of HBase who have enabled HBase Kerberos security features and who run HBase co-located on a cluster with MapReduce or YARN.

Date/time of detection: June 10th, 2013

Severity: Severe

Impact:

RPC traffic from Hadoop clients, potentially including authentication credentials, may be intercepted by any user who can submit jobs to Hadoop. RPC traffic from HBase clients to Region Servers may be intercepted by any user who can submit jobs to Hadoop.

CVE: CVE-2013-2192 (Hadoop) and CVE-2013-2193 (HBase)

Immediate action required:

- Users of CDH 4.3.0 should immediately upgrade to CDH 4.3.1 or higher.

- Users of CDH 4.2.x should immediately upgrade to CDH 4.2.2 or higher.

- Users of CDH 4.1.x should immediately upgrade to CDH 4.1.5 or higher.

ETA for resolution: August 23, 2013

Addressed in release/refresh/patch: CDH 4.1.5, CDH 4.2.2, and CDH 4.3.1.

Verification:

To verify that you are not affected by this vulnerability, confirm that you are running one of the CDH versions listed above or higher:

- On RPM-based systems (RHEL, SLES):

rpm -qi hadoop | grep -i version

- On Debian-based systems:

dpkg -s hadoop | grep -i version

DataNode Client Authentication Disabled After NameNode Restart or HA Enable

Products affected: HDFS

Releases affected: CDH 4.0.0

Users affected: Users of HDFS who have enabled HDFS Kerberos security features.

Date vulnerability discovered: June 26, 2012

Date vulnerability analysis and validation complete: June 29, 2012

Severity: Severe

Impact: Malicious clients may gain write access to data for which they have read-only permission, or gain read access to any data blocks whose IDs they can determine.

Mechanism: When Hadoop security features are enabled, clients authenticate to DataNodes using BlockTokens issued by the NameNode to the client. The DataNodes are able to verify the validity of a BlockToken, and will reject BlockTokens that were not issued by the NameNode. The DataNode determines whether or not it should check for BlockTokens when it registers with the NameNode.

Due to a bug in the DataNode/NameNode registration process, a DataNode which registers more than once for the same block pool will conclude that it thereafter no longer needs to check for BlockTokens sent by clients. That is, the client will continue to send BlockTokens as part of its communication with DataNodes, but the DataNodes will not check the validity of the tokens. A DataNode will register more than once for the same block pool whenever the NameNode restarts, or when HA is enabled.

Immediate action required:

- Understand the vulnerability introduced by restarting the NameNode, or enabling HA.

- Upgrade to CDH 4.0.1 as soon as it becomes available.

Resolution: July 6, 2012

Addressed in release/refresh/patch: CDH 4.0.1. This release addresses the vulnerability identified by CVE-2012-3376.

Verification: On the NameNode run one of the following:

- yum list hadoop-hdfs-namenode on RPM-based systems

- dpkg -l | grep hadoop-hdfs-namenode on Debian-based systems

- zypper info hadoop-hdfs-namenode for SLES11

On all DataNodes run one of the following:

- yum list hadoop-hdfs-datanode on RPM-based systems

- dpkg -l | grep hadoop-hdfs-datanode on Debian-based systems

- zypper info hadoop-hdfs-datanode for SLES11

The reported version should be >= 2.0.0+91-1.cdh4.0.1

Several Authentication Token Types Use Secret Key of Insufficient Length

Products Affected: HDFS, MapReduce, YARN, Hive, HBase

Releases Affected: If you use MapReduce, HDFS, HBase, or YARN, CDH4.0.x and all CDH3 versions between CDH3 Beta 3 and CDH3u5 refresh 1.

Users Affected: Users who have enabled Hadoop Kerberos security features.

Date/Time of Announcement: 10/12/2012 2:00pm PDT (upstream)

Verification: Verified upstream

Severity: High

Impact: Malicious users may crack the secret keys used to sign security tokens, granting access to modify data stored in HDFS, HBase, or Hive without authorization. HDFS Transport Encryption may also be brute-forced.

Mechanism: This vulnerability impacts a piece of security infrastructure in Hadoop Common, which affects the security of authentication tokens used by HDFS, MapReduce, YARN, HBase, and Hive.

Several components in Hadoop issue authentication tokens to clients in order to authenticate and authorize later access to a secured resource. These tokens consist of an identifier and a signature generated using the well-known HMAC scheme. The HMAC algorithm is based on a secret key shared between multiple server-side components.

For example, the HDFS NameNode issues block access tokens, which authorize a client to access a particular block with either read or write access. These tokens are then verified using a rotating secret key, which is shared between the NameNode and DataNodes. Similarly, MapReduce issues job-specific tokens, which allow reducer tasks to retrieve map output. HBase similarly issues authentication tokens to MapReduce tasks, allowing those tasks to access HBase data. Hive uses the same token scheme to authenticate access from MapReduce tasks to the Hive metastore.

The HMAC scheme relies on a shared secret key unknown to the client. In affected versions of Hadoop, this key was created with an insufficient length (20 bits), which allows an attacker to obtain the secret key by brute force. This may allow an attacker to perform several actions without authorization, including accessing other users' data.
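
The arithmetic behind this weakness is simple: a 20-bit key has only 2^20 = 1,048,576 possible values, so every candidate can be tried in seconds. The following Python sketch illustrates this with a toy token and is not Hadoop code. It assumes HMAC-SHA1 and a key encoded as three big-endian bytes; both choices, and all names in the example, are assumptions made for illustration.

```python
import hashlib
import hmac

KEY_BITS = 20  # key length stated in the bulletin


def key_bytes(value: int) -> bytes:
    """Encode a 20-bit integer as 3 bytes (assumed encoding)."""
    return value.to_bytes(3, "big")


def sign(key: bytes, identifier: bytes) -> bytes:
    """Compute the token signature over the identifier."""
    return hmac.digest(key, identifier, "sha1")


def brute_force(identifier: bytes, signature: bytes):
    """Try every 20-bit key; return the one that reproduces the signature."""
    for candidate in range(2 ** KEY_BITS):
        if hmac.compare_digest(sign(key_bytes(candidate), identifier), signature):
            return candidate
    return None


if __name__ == "__main__":
    secret = 0x2A3F1  # hypothetical server-side key
    token_id = b"block-42"  # hypothetical token identifier
    observed = sign(key_bytes(secret), token_id)
    print(brute_force(token_id, observed) == secret)
```

An attacker who holds one valid token has both the identifier and its signature, which is all the search needs. Once the key is recovered, tokens for other identifiers can be signed at will.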

Immediate action required: If Security is enabled, upgrade to the latest CDH release.

ETA for resolution: As of 10/12/2012, this is patched in CDH4.1.0 and CDH3u5 refresh 2. Both are available now.

Addressed in release/refresh/patch: CDH4.1.0 and CDH3u5 refresh 2

Details: CDH Downloads

MapReduce with Security

Products affected: MapReduce

Releases affected: Hadoop 1.0.1 and below, Hadoop 0.23, CDH3u0-CDH3u2, CDH3u3 containing the hadoop-0.20-sbin package, version 0.20.2+923.195 and below.

Users affected: Users who have enabled Hadoop Kerberos/MapReduce security features.

Severity: Critical

Impact: Vulnerability allows an authenticated malicious user to impersonate any other user on the cluster.

Immediate action required: Upgrade the hadoop-0.20-sbin package to version 0.20.2+923.197 or higher on all TaskTrackers to address the vulnerability. Upgrading hadoop-0.20-sbin causes several related (but unchanged) hadoop packages to be upgraded as well. If you are using Cloudera Manager version 3.7.3 or below, you must also upgrade to Cloudera Manager 3.7.4 or higher before you can successfully run jobs with Kerberos enabled after upgrading the hadoop-0.20-sbin package.

Resolution: 3/21/2012

Addressed in release/refresh/patch: hadoop-0.20-sbin package, version 0.20.2+923.197. This release addresses the vulnerability identified by CVE-2012-1574.

Remediation verification: On all TaskTrackers run one of the following:

- yum list hadoop-0.20-sbin on RPM-based systems

- dpkg -l | grep hadoop-0.20-sbin on Debian-based systems

- zypper info hadoop-0.20-sbin for SLES11

The reported version should be >= 0.20.2+923.197.
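
When many TaskTrackers have to be checked, the comparison can be automated. The following Python sketch is a deliberately simple approximation: it compares only the numeric components of the version string and does not reproduce the full version-ordering rules of rpm or dpkg. The function names are hypothetical.

```python
import re

MINIMUM_VERSION = "0.20.2+923.197"  # fixed version named in this bulletin


def version_key(version: str) -> tuple:
    """Split a version string into its numeric components."""
    return tuple(int(part) for part in re.findall(r"\d+", version))


def is_patched(reported: str, minimum: str = MINIMUM_VERSION) -> bool:
    """Return True if the reported version is at or above the minimum."""
    return version_key(reported) >= version_key(minimum)
```

For example, is_patched("0.20.2+923.195") is False, while is_patched("0.20.2+923.197") is True.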

If you are a Cloudera Enterprise customer and have further questions or need assistance, log a ticket with Cloudera Support through http://support.cloudera.com.

Apache HBase

This section lists the security bulletins that have been released for Apache HBase.

Incorrect User Authorization Applied by HBase REST Server

In CDH versions 6.0 to 6.1.1, authorization was incorrectly applied to users of the HBase REST server. Requests sent to the HBase REST server were executed with the permissions of the REST server itself, not with the permissions of the end-user. This problem does not affect previous CDH 5 releases.

Products affected: HBase

Releases affected: CDH 6.0.x, 6.1.0, 6.1.1

Date/time of detection: March 2019

Users affected: Users of the HBase REST server with authentication and authorization enabled

Severity (Low/Medium/High): 7.3 (High) CVSS:3.0/AV:N/AC:L/PR:N/UI:N/S:U/C:L/I:L/A:L

Impact: Authorization was incorrectly applied to users of the HBase REST server. Requests sent to the HBase REST server were executed with the permissions of the REST server itself, instead of the permissions of the end-user. This issue is only relevant when HBase is configured with Kerberos authentication, HBase authorization is enabled, and the REST server is configured with SPNEGO authentication. This issue does not extend beyond the HBase REST server. The impact of this vulnerability is dependent on the authorizations granted to the HBase REST service user, but, typically, this user has significant authorization in HBase.

CVE: CVE-2019-0212

Immediate action required: Stop the HBase REST server to prevent any access of HBase with incorrect authorizations. Upgrade to a version of CDH with the vulnerability fixed, and restart the HBase REST service.

Addressed in release/refresh/patch: CDH 6.1.2, 6.2.0

Knowledge article: For the latest update on this issue see the corresponding Knowledge article: TSB 2019-367: Incorrect user authorization applied by HBase REST Server

Potential Privilege Escalation for User of HBase “Thrift 1” API Server over HTTP

CVE-2018-8025 describes an issue in Apache HBase that affects the optional "Thrift 1" API server when running over HTTP. A race condition could lead to authenticated sessions being applied to the wrong users: for example, one authenticated user could be treated as a different user, or an unauthenticated user could be treated as an authenticated user.

Products affected: HBase Thrift Server

Releases affected:

- CDH 5.4.x - 5.12.x

- CDH 5.13.0, 5.13.1, 5.13.2, 5.13.3

- CDH 5.14.0, 5.14.2, 5.14.3

- CDH 5.15.0

Fixed versions: CDH 5.14.4

Users affected: Users with the HBase Thrift 1 service role installed and configured to work in “thrift over HTTP” mode. For example, those using Hue with HBase impersonation enabled.

Severity (Low/Medium/High): High

Impact: Potential privilege escalation.

CVE: CVE-2018-8025

Immediate action required: Upgrade to a CDH version with the fix, or disable the HBase Thrift-over-HTTP service. Disabling the HBase Thrift-over-HTTP service renders Hue impersonation inoperable, and all HBase access through Hue is then performed as the “hue” user instead of the authenticated user.

Knowledge article: For the latest update on this issue see the corresponding Knowledge article: TSB: 2018-315: Potential privilege escalation for user of HBase “Thrift 1” API Server over HTTP

HBase Metadata in ZooKeeper Can Lack Proper Authorization Controls

In certain circumstances, HBase does not properly set up access control in ZooKeeper. As a result, any user can modify this metadata and perform attacks, including service denial, or cause data loss in a replica cluster. Clusters configured using Cloudera Manager are not vulnerable.

Products affected: HBase

Releases affected: All CDH 4 and CDH 5 versions prior to 4.7.2, 5.0.7, 5.1.6, 5.2.6, 5.3.4, 5.4.3

Users affected: HBase users with security set up to use Kerberos

Date/time of detection: May 15, 2015

Severity (Low/Medium/High): High

Impact: An attacker could cause potential data loss in a replica cluster, or denial of service.

CVE: CVE-2015-1836

Immediate action required: To determine if your cluster is affected by this problem, open a ZooKeeper shell using hbase zkcli and check the permission on the /hbase znode, using getAcl /hbase.

If the output reads: 'world,'anyone : cdrwa, any unauthenticated user can delete or modify HBase znodes.
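
This check can also be applied to captured getAcl output. The following Python sketch is an assumption-laden helper, not part of HBase or ZooKeeper: the function name world_can_modify is hypothetical, and the regular expression assumes the output format quoted above (a quoted scheme and id followed by a colon and the permission letters).

```python
import re

# Matches entries such as "'world,'anyone" followed by ": cdrwa"
# (the colon may be on the same line or on the next one).
ACL_ENTRY = re.compile(r"'(\w+),'(\S*)\s*:\s*([cdrwa]+)")


def world_can_modify(getacl_output: str) -> bool:
    """Return True if world:anyone holds any permission other than read."""
    for scheme, ident, perms in ACL_ENTRY.findall(getacl_output):
        if scheme == "world" and ident == "anyone" and set(perms) - {"r"}:
            return True
    return False
```

A result of True for the /hbase znode corresponds to the affected state described above.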

- Change the configuration to use hbase.zookeeper.client.keytab.file on Master and RegionServers.

- Edit hbase-site.xml (which should be in /etc/hbase/) and add:

<property>
  <name>hbase.zookeeper.client.keytab.file</name>
  <value>hbase.keytab</value>
</property>

- Do a rolling restart of HBase (Master and RegionServers), and wait until it has completed.

- To fix the ACLs manually, run zkcli as the hbase user and set the ACLs so that world has read-only access and sasl/hbase has cdrwa.

(Some znodes in the list might not be present in your setup, so ignore the "node not found" exceptions.)

$ hbase zkcli
setAcl /hbase world:anyone:r,sasl:hbase:cdrwa
setAcl /hbase/backup-masters sasl:hbase:cdrwa
setAcl /hbase/draining sasl:hbase:cdrwa
setAcl /hbase/flush-table-proc sasl:hbase:cdrwa
setAcl /hbase/hbaseid world:anyone:r,sasl:hbase:cdrwa
setAcl /hbase/master world:anyone:r,sasl:hbase:cdrwa
setAcl /hbase/meta-region-server world:anyone:r,sasl:hbase:cdrwa
setAcl /hbase/namespace sasl:hbase:cdrwa
setAcl /hbase/online-snapshot sasl:hbase:cdrwa
setAcl /hbase/region-in-transition sasl:hbase:cdrwa
setAcl /hbase/recovering-regions sasl:hbase:cdrwa
setAcl /hbase/replication sasl:hbase:cdrwa
setAcl /hbase/rs sasl:hbase:cdrwa
setAcl /hbase/running sasl:hbase:cdrwa
setAcl /hbase/splitWAL sasl:hbase:cdrwa
setAcl /hbase/table sasl:hbase:cdrwa
setAcl /hbase/table-lock sasl:hbase:cdrwa
setAcl /hbase/tokenauth sasl:hbase:cdrwa

Addressed in release/refresh/patch: An update will be provided when solutions are in place.

For updates on this issue, see the corresponding Knowledge article:

TSB 2015-65: HBase Metadata in ZooKeeper can lack proper Authorization Controls

Apache Hadoop and Apache HBase "Man-in-the-Middle" Vulnerability

The Apache Hadoop and HBase RPC protocols are intended to provide bi-directional authentication between clients and servers. However, a malicious server or network attacker can unilaterally disable these authentication checks. This allows for potential reduction in the configured quality of protection of the RPC traffic, and privilege escalation if authentication credentials are passed over RPC.

Products affected:

- Hadoop

- HBase

Releases affected:

- All versions of CDH 4.3.x prior to 4.3.1

- All versions of CDH 4.2.x prior to 4.2.2

- All versions of CDH 4.1.x prior to 4.1.5

- All versions of CDH 4.0.x

Users affected:

- Users of HDFS who have enabled Hadoop Kerberos security features and HDFS data encryption features.

- Users of MapReduce or YARN who have enabled Hadoop Kerberos security features.

- Users of HBase who have enabled HBase Kerberos security features and who run HBase co-located on a cluster with MapReduce or YARN.

Date/time of detection: June 10th, 2013

Severity: Severe

Impact:

RPC traffic from Hadoop clients, potentially including authentication credentials, may be intercepted by any user who can submit jobs to Hadoop. RPC traffic from HBase clients to Region Servers may be intercepted by any user who can submit jobs to Hadoop.

CVE: CVE-2013-2192 (Hadoop) and CVE-2013-2193 (HBase)

Immediate action required:

- Users of CDH 4.3.0 should immediately upgrade to CDH 4.3.1 or higher.

- Users of CDH 4.2.x should immediately upgrade to CDH 4.2.2 or higher.

- Users of CDH 4.1.x should immediately upgrade to CDH 4.1.5 or higher.

ETA for resolution: August 23, 2013

Addressed in release/refresh/patch: CDH 4.1.5, CDH 4.2.2, and CDH 4.3.1.

Verification:

To verify that you are not affected by this vulnerability, confirm that you are running one of the CDH versions listed above or higher:

- On RPM-based systems (RHEL, SLES):

rpm -qi hadoop | grep -i version

- On Debian-based systems:

dpkg -s hadoop | grep -i version

Apache Hive

This section lists the security bulletins that have been released for Apache Hive.

Apache Hive Vulnerabilities CVE-2018-1282 and CVE-2018-1284

This security bulletin covers two vulnerabilities discovered in Hive.

CVE-2018-1282: JDBC driver is susceptible to SQL injection attack if the input parameters are not properly cleaned

This vulnerability allows carefully crafted arguments to be used to bypass the argument-escaping and clean-up that the Apache Hive JDBC driver does with PreparedStatement objects.

If you use Hive in CDH, you have the option of using the Apache Hive JDBC driver or the Cloudera Hive JDBC driver, which is distributed by Cloudera for use with your JDBC applications. Cloudera strongly recommends that you use the Cloudera Hive JDBC driver and offers only limited support for the Apache Hive JDBC driver. If you use the Cloudera Hive JDBC driver you are not affected by this vulnerability.

Mitigation: Upgrade to use the Cloudera Hive JDBC driver, or perform the following actions in your Apache Hive JDBC client code or application when dealing with user provided input in PreparedStatement objects:

- Avoid passing user input with the PreparedStatement.setBinaryStream method, and

- Sanitize user input for the PreparedStatement.setString method by replacing all occurrences of \' (backslash, single quotation mark) with ' (single quotation mark).
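
The replacement described in the second item can be sketched as follows. The example is written in Python purely to show the string transformation; a real client would apply the equivalent logic in its JDBC application code before calling PreparedStatement.setString. The function name is hypothetical, and repeating the replacement until no \' sequence remains is an assumption added here so that a single pass cannot leave a new \' behind.

```python
def sanitize_for_set_string(value: str) -> str:
    """Replace every backslash + single quote pair with a plain single quote.

    Hypothetical helper; loops because one replacement pass over an input
    such as two backslashes followed by a quote produces a new pair.
    """
    while "\\'" in value:
        value = value.replace("\\'", "'")
    return value
```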

Detected by: CVE-2018-1282 was detected by Bear Giles of Snaplogic

CVE-2018-1284: Hive UDF series UDFXPathXXXX allows users to pass carefully crafted XML to access arbitrary files

If Hive impersonation is disabled or Apache Sentry is used (or both), a malicious user might use any of the Hive xpath UDFs to expose the contents of any file on the node running HiveServer2 that is owned by the HiveServer2 user (usually hive).

Mitigation: Upgrade to a release where this is fixed. If xpath functions are not currently used, disable them with Cloudera Manager by setting the hive.server2.builtin.udf.blacklist property to xpath,xpath_short,xpath_int,xpath_long,xpath_float,xpath_double,xpath_number,xpath_string in the HiveServer2 Advanced Configuration Snippet (Safety Valve) for hive-site.xml. For more information about setting this property to blacklist Hive UDFs, see the Cloudera Documentation.

Products affected: Hive

Releases affected:

- CDH 5.12 and earlier

- CDH 5.13.0, 5.13.1, 5.13.2, 5.13.3

- CDH 5.14.0, 5.14.1, 5.14.2

Users affected: All

Severity (Low/Medium/High): High

Impact: SQL injection, compromise of the hive user account

CVE: CVE-2018-1282, CVE-2018-1284

Immediate action required: Upgrade to a CDH release with the fix or perform the above mitigations.

Addressed in release/refresh/patch: This will be fixed in a future release.

For the latest update on these issues, see the corresponding Knowledge article:

TSB 2018-299: Hive Vulnerabilities CVE-2018-1282 and CVE-2018-1284

Apache Hive SSL Vulnerability Bug Disclosure

- SSL is not turned on, or

- SSL is turned on but only non-self-signed certificates are used.

If neither of the above statements describes your deployment, please read on.

In CDH 5.2 and later releases, the Apache Hive SSL vulnerability (CVE-2016-3083) affects applications and tools that use:

- Apache JDBC driver with SSL enabled, or

- Cloudera Hive JDBC drivers with self-signed certificates and SSL enabled

The certificate must be self-signed. A certificate signed by a trusted (or untrusted) Certificate Authority (CA) is not impacted by this vulnerability.

Cloudera does not recommend the use of self-signed certificates.

The CVE-2016-3083: Apache Hive SSL vulnerability is fixed by HIVE-13390 and is documented in the Apache community as follows:

"Apache Hive (JDBC + HiveServer2) implements SSL for plain TCP and HTTP connections (it supports both transport modes). While validating the server's certificate during the connection setup, the client doesn't seem to be verifying the common name attribute of the certificate. In this way, if a JDBC client sends an SSL request to server abc.example.com, and the server responds with a valid certificate (certified by CA) but issued to xyz.example.com, the client will accept that as a valid certificate and the SSL handshake will go through."

This means that it would be possible to set up a man-in-the-middle attack to intercept all SSL-protected JDBC communication.
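The missing check described in the quotation can be illustrated with a small sketch. It is not the HIVE-13390 fix and not the driver's code: the class CommonNameCheck and its method are hypothetical, and the sketch compares only the CN attribute of a certificate subject with the requested host name. Production hostname verification also considers subject alternative names and wildcard rules, which are omitted here.

```java
import javax.naming.InvalidNameException;
import javax.naming.ldap.LdapName;
import javax.naming.ldap.Rdn;

// Hypothetical illustration of a common-name check; not Hive or Cloudera code.
final class CommonNameCheck {

    private CommonNameCheck() {}

    // Returns true only if the subject DN has a CN equal to the requested host.
    static boolean commonNameMatches(String requestedHost, String subjectDn) {
        try {
            for (Rdn rdn : new LdapName(subjectDn).getRdns()) {
                if ("CN".equalsIgnoreCase(rdn.getType())) {
                    return requestedHost.equalsIgnoreCase(String.valueOf(rdn.getValue()));
                }
            }
        } catch (InvalidNameException e) {
            // An unparseable subject is treated as a mismatch.
            return false;
        }
        return false;
    }

    public static void main(String[] args) {
        String subject = "CN=xyz.example.com,OU=IT,O=Example";
        // The client asked for abc.example.com but the certificate names xyz.example.com.
        System.out.println(commonNameMatches("abc.example.com", subject)); // prints false
        System.out.println(commonNameMatches("xyz.example.com", subject)); // prints true
    }
}
```

A client that skips a comparison of this kind accepts the xyz.example.com certificate for abc.example.com, which is the behavior the quotation describes.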

CDH Hive users have the option of deploying either the Apache Hive JDBC driver or the Cloudera Hive JDBC driver that is distributed by Cloudera for use with their JDBC applications. Traditionally, Cloudera has strongly recommended use of the Cloudera Hive JDBC driver and offers only limited support for the Apache Hive JDBC driver. The JDBC jars in the CLASSPATH environment variable can be examined to determine which JDBC driver is in use. If the hive-jdbc-1.1.0-cdh<CDH_VERSION>.jar is included in the CLASSPATH, the Apache JDBC driver is being used. If the HiveJDBC4.jar or the HiveJDBC41.jar is in the CLASSPATH, the Cloudera Hive JDBC driver is being used.
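The classpath inspection described above can be automated with a short sketch. The class HiveDriverCheck and its return values are hypothetical, and matching any jar whose name starts with hive-jdbc- is an assumption that loosens the exact hive-jdbc-1.1.0-cdh<CDH_VERSION>.jar name given above.

```java
import java.io.File;
import java.util.regex.Pattern;

// Hypothetical helper for illustration; not part of any Hive or Cloudera tool.
final class HiveDriverCheck {

    private HiveDriverCheck() {}

    // Returns "apache", "cloudera", "both", or "none" for the given classpath string.
    static String detect(String classpath) {
        boolean apache = false;
        boolean cloudera = false;
        for (String entry : classpath.split(Pattern.quote(File.pathSeparator))) {
            String jar = new File(entry).getName();
            if (jar.startsWith("hive-jdbc-") && jar.endsWith(".jar")) {
                apache = true;   // assumed naming pattern of the Apache Hive JDBC driver jar
            } else if (jar.equals("HiveJDBC4.jar") || jar.equals("HiveJDBC41.jar")) {
                cloudera = true; // Cloudera Hive JDBC driver jar names from the bulletin
            }
        }
        if (apache && cloudera) {
            return "both";
        }
        if (apache) {
            return "apache";
        }
        return cloudera ? "cloudera" : "none";
    }

    public static void main(String[] args) {
        String classpath = System.getenv("CLASSPATH");
        System.out.println(detect(classpath == null ? "" : classpath));
    }
}
```

The sketch reports only which jar names are present in the string it is given; a result of "both" means the entries need to be reviewed manually.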

JDBC drivers can also be used in an embedded mode. For example, when connecting to HiveServer2 by way of tools such as Beeline, the JDBC Client is invoked internally over the Thrift API. The JDBC driver in use by Beeline can also be determined by examining the driver version information printed after the connection is established.

If the output shows:

- Hive JDBC (version 1.1.0-cdh<CDH_VERSION>), the Apache JDBC driver is being used.

- Driver: HiveJDBC (version 02.05.18.1050), the Cloudera Hive JDBC Driver is being used.

hive.server2.use.SSL=true

hive.server2.enable.SSL=true

This information can be used to decide whether a tool or application is impacted by this vulnerability.

For Cloudera Hive JDBC drivers with self-signed certificates and SSL enabled: Generate non-self-signed certificates according to the following documentation: https://www.cloudera.com/documentation/enterprise/latest/topics/cm_sg_create_deploy_certs.html

For Apache JDBC drivers with SSL enabled: You can switch to use the Cloudera Hive JDBC driver. Note that the Cloudera Hive JDBC driver only displays query results and skips displaying informational messages such as those logged by MapReduce jobs (that are invoked as part of executing the JDBC command). For example:

INFO : Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

INFO : 2017-06-06 14:19:41,115 Stage-1 map = 0%, reduce = 0%

INFO : 2017-06-06 14:19:48,427 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.87 sec

INFO : 2017-06-06 14:19:55,845 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.75 sec

INFO : MapReduce Total cumulative CPU time: 3 seconds 750 msec

INFO : Ended Job = job_1496750846200_0001

INFO : MapReduce Jobs Launched:

INFO : Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 3.75 sec HDFS Read: 31539 HDFS Write: 3 SUCCESS

INFO : Total MapReduce CPU Time Spent: 3 seconds 750 msec

The steps required to switch to using the Cloudera Hive JDBC driver for Beeline are:

- Download the latest Cloudera Hive JDBC driver from: https://www.cloudera.com/downloads/connectors/hive/jdbc/2-5-18.html

- Unzip the archive:

unzip Cloudera_HiveJDBC41_2.5.18.1050.zip

- Add the HiveJDBC41.jar to the beginning of the CLASSPATH:

export HIVE_CLASSPATH=/root/HiveJDBC41.jar:$HIVE_CLASSPATH

- Execute Beeline, but change the connection URL according to the Cloudera driver documentation at the following location: http://www.cloudera.com/documentation/other/connectors/hive-jdbc/latest/Cloudera-JDBC-Driver-for-Apache-Hive-Install-Guide.pdf

- Confirm the change by checking the driver version when connecting to HiveServer2 with Beeline:

Connecting to jdbc:hive2://<HOST>:10000

Connected to: Apache Hive (version 1.1.0-cdh<CDH_VERSION>)

Driver: HiveJDBC (version 02.05.18.1050)

- The following error message, if displayed, can be ignored:

Error: [Cloudera] [JDBC] (11975) Unsupported transaction isolation level: 4. (state=HY000,code=11975)

For other third-party tools and applications, replace the Apache JDBC driver as follows:

- Add the HiveJDBC41.jar to the beginning of the CLASSPATH for the application:

export CLASSPATH=/root/HiveJDBC41.jar:$CLASSPATH

- Change the JDBC connection URL according to the Cloudera driver documentation located at: http://www.cloudera.com/documentation/other/connectors/hive-jdbc/latest/Cloudera-JDBC-Driver-for-Apache-Hive-Install-Guide.pdf

Products affected: Hive

Releases affected:

- CDH 5.2.0, 5.2.1, 5.2.3, 5.2.4, 5.2.5, 5.2.6

- CDH 5.3.0, 5.3.1, 5.3.2, 5.3.3, 5.3.4, 5.3.5, 5.3.6, 5.3.8, 5.3.9, 5.3.10

- CDH 5.4.0, 5.4.1, 5.4.2, 5.4.3, 5.4.4, 5.4.5, 5.4.7, 5.4.8, 5.4.9, 5.4.10, 5.4.11

- CDH 5.5.0, 5.5.1, 5.5.2, 5.5.4, 5.5.5, 5.5.6

- CDH 5.6.0, 5.6.1

- CDH 5.7.0, 5.7.1, 5.7.2, 5.7.3, 5.7.4, 5.7.5, 5.7.6

- CDH 5.8.0, 5.8.2, 5.8.3, 5.8.4, 5.8.5

- CDH 5.9.0, 5.9.1, 5.9.2

- CDH 5.10.0, 5.10.1

- CDH 5.11.0

Users affected: JDBC (Apache Hive JDBC driver using SSL or Cloudera Hive JDBC driver with self-signed certificates) and HiveServer2 users

Detected by: Branden Crawford from Inteco Systems Limited

Severity (Low/Medium/High): Medium

Impact: As discussed above.

CVE: CVE-2016-3083

Immediate action required:

- For non-Beeline clients (including third-party tools or applications): If Apache Hive JDBC drivers are being used, switch to Cloudera JDBC drivers (and use externally signed CA certificates, as always recommended for production use).

- For Beeline (or Beeline-based clients, e.g. Oozie): Replace Beeline’s embedded Apache JDBC driver with the Cloudera JDBC driver as shown above. Alternatively, if these JDBC-based clients are invoked within a CDH cluster, upgrade the cluster to a release where the issue has been addressed.

Addressed release/refresh/patch: CDH 5.11.1 and later

For the latest update on this issue, see the corresponding Knowledge article:

Hive built-in functions “reflect”, “reflect2”, and “java_method” not blocked by default in Sentry

Sentry does not block the execution of Hive built-in functions “reflect”, “reflect2”, and “java_method” by default in some CDH versions. These functions allow the execution of arbitrary user code, which is a security issue.

This issue is documented in SENTRY-960.

Products affected: Hive, Sentry

Releases affected:

CDH 5.4.0, CDH 5.4.1, CDH 5.4.2, CDH 5.4.3, CDH 5.4.4, CDH 5.4.5, CDH 5.4.6, CDH 5.4.7, CDH 5.4.8, CDH 5.5.0, CDH 5.5.1

Users affected: Users running Sentry with Hive.

Date/time of detection: November 13, 2015

Severity (Low/Medium/High): High

Impact: This potential vulnerability may enable an authenticated user to execute arbitrary code as a Hive superuser.

CVE: CVE-2016-0760

Immediate action required: Explicitly add the following to the blacklist property in the hive-site.xml of HiveServer2:

<property>

<name>hive.server2.builtin.udf.blacklist</name>

<value>reflect,reflect2,java_method</value>

</property>

Addressed in release/refresh/patch: CDH 5.4.9, CDH 5.5.2, CDH 5.6.0 and higher

HiveServer2 LDAP Provider May Allow Authentication with Blank Password

Hive may allow a user to authenticate without entering a password, depending on the order in which classes are loaded.

Specifically, Hive's SaslPlainServerFactory checks passwords, but the same class provided in Hadoop does not. Therefore, if the Hadoop class is loaded first, users can authenticate with HiveServer2 without specifying the password.

Products affected: Hive

Releases affected:

- CDH 5.0, 5.0.1, 5.0.2, 5.0.3, 5.0.4, 5.0.5

- CDH 5.1, 5.1.2, 5.1.3, 5.1.4

- CDH 5.2, 5.2.1, 5.2.3, 5.2.4

- CDH 5.3, 5.3.1, 5.3.2

- CDH 5.4.1, 5.4.2, 5.4.3

Users affected: All users using Hive with LDAP authentication.

Date/time of detection: March 11, 2015

Severity (Low/Medium/High): High

Impact: A malicious user may be able to authenticate with HiveServer2 without specifying a password.

CVE: CVE-2015-1772

Immediate action required: Upgrade to CDH 5.4.4, 5.3.3, 5.2.5, 5.1.5, or 5.0.6

Addressed in release/refresh/patch: CDH 5.4.4, 5.3.3, 5.2.5, 5.1.5, or 5.0.6

For more updates on this issue, see the corresponding Knowledge article:

HiveServer2 LDAP Provider may Allow Authentication with Blank Password

Hue

This section lists the security bulletins that have been released for Hue.

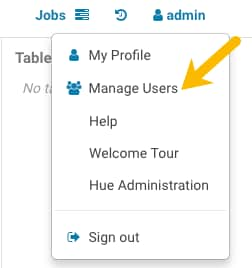

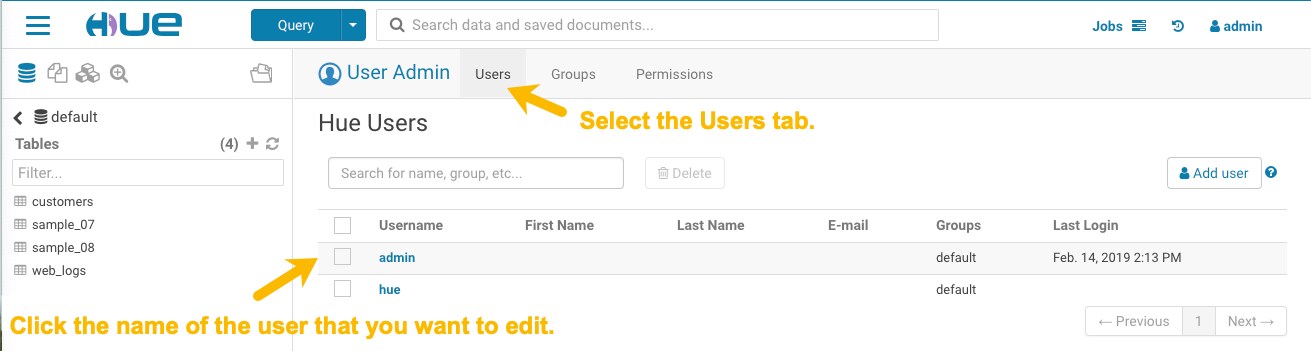

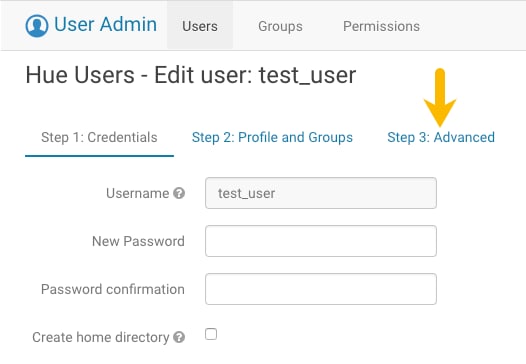

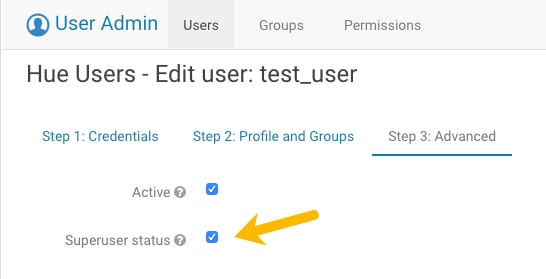

Hue external users granted superuser privileges in C6

When using either the LdapBackend or the SAML2Backend authentication backend in Hue, users who are created automatically when they log in for the first time are granted superuser privileges in CDH 6. This does not apply to users that are created through the User Admin application in Hue.

Products affected: Hue

Releases affected: CDH 6.0.0, CDH 6.0.1, CDH 6.1.0

Users affected: All users

Date/time of detection: December 12, 2018

Severity (Low/Medium/High): Medium

Impact:

The superuser privilege is granted to any user that logs in to Hue when LDAP or SAML authentication is used. For example, if you have the create_users_on_login property set to true in the Hue Service Advanced Configuration Snippet (Safety Valve) for hue_safety_valve.ini, and you are using LDAP or SAML authentication, a user that logs in to Hue for the first time is created with superuser privileges and can perform the following actions:

- Create/Delete users and groups

- Assign users to groups

- Alter group permissions

- Synchronize Hue users with your LDAP server

- Create local users and groups (these local users can log in to Hue only if multi-backend authentication is set up with LdapBackend and AllowFirstUserDjangoBackend)

- Assign users to groups

- Alter group permissions

- When users are synced with your LDAP server manually by using the User Admin page in Hue.

- When you are using other authentication methods. For example:

- AllowFirstUserDjangoBackend

- Spnego

- PAM

- Oauth

- Local users, including users created by unexpected superusers, can log in through AllowFirstUserDjangoBackend.

- Local users in Hue that are created as hive, hdfs, or solr have privileges to access protected data and alter permissions in the Security app.

- Removing the AllowFirstUserDjangoBackend authentication backend can stop local users from logging in to Hue, but it requires the administrator to have Cloudera Manager access.

CVE: CVE-2019-7319

Immediate action required: Upgrade and follow the instructions below.

Addressed in release/refresh/patch: CDH 6.1.1 and CDH 6.2.0

UPDATE useradmin_userprofile SET `creation_method` = 'EXTERNAL' WHERE `creation_method` = 'CreationMethod.EXTERNAL';

After executing the UPDATE statement, new Hue users are no longer automatically created as superusers.

To list the superusers, run the following SQL query: