HDFS Replication Tuning

You can configure the number of chunks that will be generated by setting the following base parameters:

- distcp.dynamic.max.chunks.ideal

The “ideal” chunk count. Identifies the target number of chunks to generate. (The default value is 100.)

- distcp.dynamic.min.records_per_chunk

The minimum number of records per chunk. Ensures that each chunk is at least a certain size. (The default value is 5.)

The distcp.dynamic.max.chunks.ideal parameter is the most important, and controls how many chunks are generated in the general case. The distcp.dynamic.min.records_per_chunk parameter identifies the minimum number of records that are packed into a chunk. This effectively lowers the number of chunks created if the computed records per chunk falls below this number.

- distcp.dynamic.max.chunks.tolerable

The maximum chunk count. Identifies the maximum number of chunks that are allowed to be generated. Set this to a value that is greater than or equal to the value set for distcp.dynamic.max.chunks.ideal. An error condition is triggered if your configuration causes this value to be exceeded. (The default value is 400.)

- distcp.dynamic.split.ratio

The minimum number of chunks per mapper. Validates that each mapper has at least this many chunks. (The default value is 2.)

- If you use the default values for all parameters, and you have 500 files in your replication and 20 mappers, the replication job generates 100 chunks with 5 files in each chunk (and 5 chunks per mapper, on average).

- If you have only 200 total files, the replication job automatically packages them into 40 chunks to satisfy the requirement defined by the distcp.dynamic.min.records_per_chunk parameter.

- If you use 250 mappers, the default distcp.dynamic.split.ratio value of 2 chunks per mapper requires 500 total chunks. This exceeds the default distcp.dynamic.max.chunks.tolerable value of 400 and triggers an error.
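The arithmetic behind these three examples can be checked with a small sketch. This is a simplified model of the rules as described in this section, not the DistCp implementation; the function name `estimate_chunks` and its argument names are hypothetical, and the third example assumes 500 files because the text does not state a file count for it.

```python
import math


def estimate_chunks(files, mappers, ideal=100, min_records=5,
                    tolerable=400, split_ratio=2):
    """Estimate the chunk count from the rules described in this section.

    Simplified illustration only. Defaults mirror the documented defaults:
    ideal       -> distcp.dynamic.max.chunks.ideal
    min_records -> distcp.dynamic.min.records_per_chunk
    tolerable   -> distcp.dynamic.max.chunks.tolerable
    split_ratio -> distcp.dynamic.split.ratio
    """
    # General case: aim for the ideal chunk count.
    chunks = ideal

    # If that would put fewer than min_records files in a chunk,
    # use fewer chunks so each holds at least min_records files.
    if files / chunks < min_records:
        chunks = max(1, math.ceil(files / min_records))

    # Each mapper must have at least split_ratio chunks.
    chunks = max(chunks, split_ratio * mappers)

    # Exceeding the tolerable maximum is an error condition.
    if chunks > tolerable:
        raise ValueError(
            f"{chunks} chunks required, but at most {tolerable} are tolerable"
        )
    return chunks


print(estimate_chunks(files=500, mappers=20))   # 100 chunks, 5 files each
print(estimate_chunks(files=200, mappers=20))   # 40 chunks, 5 files each

try:
    estimate_chunks(files=500, mappers=250)     # needs 2 * 250 = 500 chunks
except ValueError as err:
    print("error:", err)
```

Raising the `tolerable` and `ideal` arguments in this model shows why a job with many mappers needs larger values for both properties.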

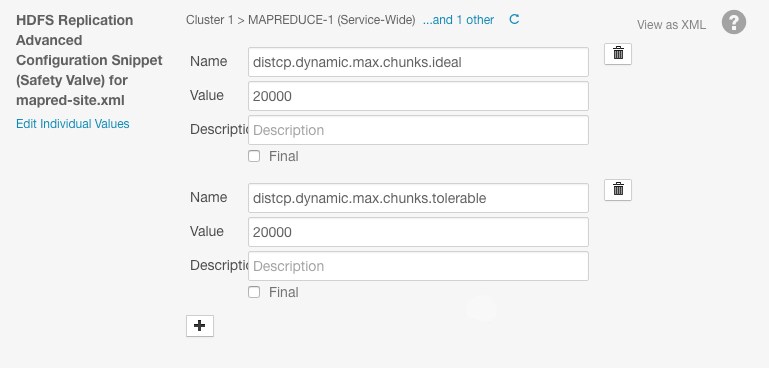

- Open the Cloudera Manager Admin Console for the destination cluster and go to the MapReduce service for your cluster.

- Click the Configuration tab.

- Search for the HDFS Replication Advanced Configuration Snippet (Safety Valve) for mapred-site.xml property.

- Click the add icon to add each of the following new properties:

- distcp.dynamic.max.chunks.ideal

Set the ideal number for the total chunks generated. (Default value is 100.)

- distcp.dynamic.max.chunks.tolerable

Set the upper limit for how many chunks are generated. (Default value is 400.)

- Set both properties to the same value, and make that value greater than 10,000. Tune the value so that the number of files per chunk is greater than the value set for the distcp.dynamic.min.records_per_chunk property.
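As an illustration, the two properties might look like the following fragment, assuming the safety valve accepts the standard Hadoop `<property>` XML format used in mapred-site.xml. The value 12000 is a hypothetical placeholder, chosen only because it is greater than 10,000 and identical for both properties; choose a value that suits the number of files in your replication.

```xml
<!-- Hypothetical values: both properties are set to the same number, greater than 10,000 -->
<property>
  <name>distcp.dynamic.max.chunks.ideal</name>
  <value>12000</value>
</property>
<property>
  <name>distcp.dynamic.max.chunks.tolerable</name>
  <value>12000</value>
</property>
```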