How to Configure Security for Amazon S3

Cloudera CDH clusters can use the Amazon Simple Storage Service (S3) as a persistent data store for a variety of workloads and use cases. For example, as detailed in Configuring Transient Hive ETL Jobs to Use the Amazon S3 Filesystem, you can use S3 for both source and target data from transient extract-transform-load operations.

Security for Amazon S3 storage encompasses authentication, authorization, and encryption mechanisms, as detailed below:

Authentication Using the S3 Connector Service

As of CDH 5.10, integration with Amazon S3 from Cloudera clusters has been simplified with the addition of the S3 Connector Service, which automates the authentication process to S3 for Impala and Hue. This optional service can be added to the cluster using the Cloudera Manager Admin Console. This new feature supports long-running multi-tenant Impala clusters and is intended for business analytics use cases. Adding the S3 Connector Service to a cluster also activates an S3 Browser for Hue that provides web-based access to data on S3.

The S3 Connector Service transparently handles distribution of the credentials for Amazon S3, so it can be added only to a secure cluster: a cluster that uses Kerberos for authentication and Sentry for role-based authorization. A single set of AWS credentials is configured for the cluster and used, for example, to define Impala tables backed by S3 data. Access to those tables is governed by the permissions configured in Sentry.

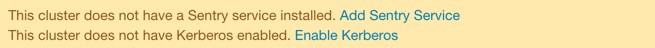

The S3 Connector Service cannot be added to a non-secure cluster. Attempting to add the service to a non-secure cluster raises error messages in the Cloudera Manager Admin Console for

each missing item, as shown here:

In addition, Cloudera recommends that TLS/SSL be enabled for the cluster. See How to Configure TLS Encryption for Cloudera Manager for details.

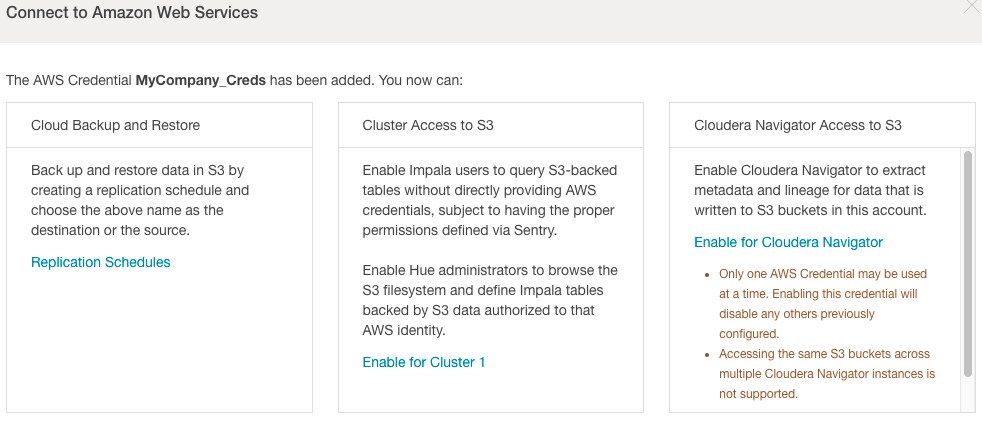

Assuming your cluster meets the security requirements above, after adding your AWS credential to the cluster, the Cloudera Manager Admin Console provides three options for using them, as shown below:

Select Cluster Access to S3 to use with Impala and Hue. You can also select Enable for Cloudera Navigator, so that Navigator Metadata Server can access metadata about objects stored on S3.

Authentication Using Advanced Configuration Snippets

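When AWS credentials are specified directly in configuration properties, as described in this section, protect them:

- Never share the credentials with other cluster users or services.

- Make sure the credentials are not stored in cleartext in any configuration files.

- Use Cloudera sensitive data redaction to ensure that the credentials do not appear in log files.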
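The redaction precaution can be illustrated with a small Python sketch. The patterns are assumptions for illustration, not Cloudera's built-in redaction rules (those are configured in Cloudera Manager): they mask AWS access key IDs (20 characters beginning with AKIA for long-term keys or ASIA for temporary keys) and the values of the s3a credential properties when written as name=value. The redact helper is hypothetical.

```python
import re

# Illustrative patterns only (assumptions, not Cloudera's redaction rules).
# AWS access key IDs: AKIA (long-term) or ASIA (temporary) plus 16 characters.
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")

# Values of credential-bearing s3a properties written as name=value,
# which also covers -Dname=value command-line options.
SECRET_ASSIGNMENT = re.compile(
    r"(fs\.s3a\.(?:access\.key|secret\.key|session\.token)=)(\S+)"
)


def redact(line: str) -> str:
    """Mask AWS credentials that appear in a single log line."""
    # Keep the property name, hide its value.
    line = SECRET_ASSIGNMENT.sub(r"\1********", line)
    # Hide any bare access key IDs that remain.
    return ACCESS_KEY_ID.sub("********", line)
```

A log pipeline could apply redact to each line before writing it; real deployments should rely on Cloudera's redaction feature rather than ad hoc patterns like these.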

Hadoop clusters support integration with Amazon S3 storage through the hadoop-aws module, also known as the hadoop-aws connector. There have been a few iterations of this module, but CDH supports only the most recent implementation (s3a). The S3 Connector Service uses this connector behind the scenes.

To enable CDH services to access Amazon S3, AWS credentials can be specified using the fs.s3a.access.key and fs.s3a.secret.key properties:

<property>
  <name>fs.s3a.access.key</name>
  <value>your_access_key</value>
</property>
<property>
  <name>fs.s3a.secret.key</name>
  <value>your_secret_key</value>
</property>

Add these properties using the same procedure described in Data At-Rest Encryption on Amazon S3, which uses the Cluster-wide Advanced Configuration Snippet (Safety Valve) for core-site.xml.
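Because these properties are typically pasted into an Advanced Configuration Snippet, it can help to generate the XML programmatically so that special characters in values are escaped correctly. This is a minimal Python sketch; the helper name hadoop_property_xml is hypothetical, and the placeholder values shown in the text should not be replaced with real keys in a shared script, given the precautions listed earlier.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def hadoop_property_xml(props: Dict[str, str]) -> str:
    """Render name/value pairs as Hadoop <property> elements (one per line)."""
    fragments = []
    for name, value in props.items():
        prop = ET.Element("property")
        ET.SubElement(prop, "name").text = name
        # ElementTree escapes &, < and > in text content automatically.
        ET.SubElement(prop, "value").text = value
        fragments.append(ET.tostring(prop, encoding="unicode"))
    return "\n".join(fragments)


if __name__ == "__main__":
    print(hadoop_property_xml({
        "fs.s3a.access.key": "your_access_key",
        "fs.s3a.secret.key": "your_secret_key",
    }))
```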

As an alternative to storing long-term credentials in core-site.xml, a somewhat more secure approach is to use temporary credentials. These include a session token that limits the validity of the credentials to a set time frame, which shortens the window in which a stolen key can be used.

Using Temporary Credentials for Amazon S3

The AWS Security Token Service (STS) issues temporary credentials to access AWS services such as S3. These temporary credentials include an access key, a secret key, and a session token that expires within a configurable amount of time. Requesting temporary credentials is not currently handled transparently by CDH, so admins need to obtain them directly from Amazon STS. For details, see Temporary Security Credentials in the AWS Identity and Access Management Guide.

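After obtaining the temporary credentials, pass them to the hadoop-aws connector together with the TemporaryAWSCredentialsProvider credentials provider, for example as -D options on the command line:

-Dfs.s3a.access.key=your_temp_access_key -Dfs.s3a.secret.key=your_temp_secret_key -Dfs.s3a.session.token=your_session_token_from_AmazonSTS -Dfs.s3a.aws.credentials.provider=org.apache.hadoop.fs.s3a.TemporaryAWSCredentialsProvider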
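A minimal Python sketch of assembling these options, assuming you have already obtained the access key, secret key, session token, and expiration time from AWS STS. The TemporaryCredentials class, the s3a_temp_credential_args helper, and the five-minute safety margin are hypothetical choices for illustration. Because the session token limits how long the credentials stay valid, the sketch refuses credentials that are about to expire.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional

# Credentials provider class used in the -D options above.
PROVIDER = "org.apache.hadoop.fs.s3a.TemporaryAWSCredentialsProvider"


@dataclass(frozen=True)
class TemporaryCredentials:
    """Hypothetical container for the values returned by AWS STS."""
    access_key: str
    secret_key: str
    session_token: str
    expiration: datetime  # must be timezone-aware (UTC)


def s3a_temp_credential_args(
    creds: TemporaryCredentials,
    now: Optional[datetime] = None,
    min_remaining: timedelta = timedelta(minutes=5),  # assumed safety margin
) -> List[str]:
    """Build Hadoop -D options for temporary S3 credentials."""
    now = now if now is not None else datetime.now(timezone.utc)
    if creds.expiration - now < min_remaining:
        raise ValueError("temporary credentials expire too soon; request new ones from STS")
    return [
        f"-Dfs.s3a.access.key={creds.access_key}",
        f"-Dfs.s3a.secret.key={creds.secret_key}",
        f"-Dfs.s3a.session.token={creds.session_token}",
        f"-Dfs.s3a.aws.credentials.provider={PROVIDER}",
    ]
```

The resulting arguments contain secrets, and command-line arguments are visible in process listings, so avoid echoing them to shell history or logs.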

Configuration Properties for Amazon S3

This table provides reference documentation for some of the core-site.xml properties that are relevant for the hadoop-aws connector.

| Property | Default | Description |
|---|---|---|
| fs.s3a.server-side-encryption-algorithm | None | Enables server-side encryption for S3 data and specifies the encryption algorithm. The only allowed value is AES256 (256-bit Advanced Encryption Standard), the block cipher used for server-side encryption. See Enabling Data At-Rest Encryption for Amazon S3 for details. |
| fs.s3a.access.key | None | Specifies the AWS access key ID. Omit this property if you authenticate to S3 using role-based authentication (IAM). |
| fs.s3a.secret.key | None | Specifies the AWS secret key provided by Amazon. Omit this property if you authenticate to S3 using role-based authentication (IAM). |
| fs.s3a.connection.ssl.enabled | true | Enables (true) or disables (false) TLS/SSL connections to S3. |
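To check how a given core-site.xml resolves these properties, a minimal Python sketch can parse the file, apply the defaults from the table, and reject any server-side encryption algorithm other than AES256. The load_s3a_settings helper is hypothetical, and it deliberately ignores Hadoop features such as variable expansion, final properties, and layered configuration resources.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Optional, Union

# Defaults from the table; None means the property is unset.
DEFAULTS: Dict[str, Optional[str]] = {
    "fs.s3a.server-side-encryption-algorithm": None,
    "fs.s3a.access.key": None,
    "fs.s3a.secret.key": None,
    "fs.s3a.connection.ssl.enabled": "true",
}


def load_s3a_settings(core_site_xml: str) -> Dict[str, Union[str, bool, None]]:
    """Resolve the table's s3a properties from core-site.xml text (simplified)."""
    settings: Dict[str, Union[str, bool, None]] = dict(DEFAULTS)
    root = ET.fromstring(core_site_xml)
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        if name in DEFAULTS:
            # A later definition overrides an earlier one.
            settings[name] = (prop.findtext("value") or "").strip()

    sse = settings["fs.s3a.server-side-encryption-algorithm"]
    if sse and sse != "AES256":
        raise ValueError(f"unsupported server-side encryption algorithm: {sse!r}")

    # Intended to mirror Hadoop's getBoolean(name, true): only "false"
    # (case-insensitive) disables TLS; any other value keeps the default.
    ssl_raw = str(settings["fs.s3a.connection.ssl.enabled"]).lower()
    settings["fs.s3a.connection.ssl.enabled"] = ssl_raw != "false"
    return settings
```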

Connecting to Amazon S3 Using TLS

The boolean parameter fs.s3a.connection.ssl.enabled in core-site.xml controls whether the hadoop-aws connector uses TLS when communicating with Amazon S3. Because this parameter is set to true by default, you do not need to configure anything to enable TLS. If you are not using TLS on Amazon S3, the connector will automatically fall back to a plaintext connection.

The root Certificate Authority (CA) certificate that signed the Amazon S3 certificate is trusted by default. If you are using custom truststores, make sure that the configured truststore for each service trusts the root CA certificate.

To import the root CA certificate into your custom truststore, run the following command:

$ $JAVA_HOME/bin/keytool -importkeystore -srckeystore $JAVA_HOME/jre/lib/security/cacerts -destkeystore /path/to/custom/truststore -srcalias baltimorecybertrustca

If you do not have the $JAVA_HOME variable set, replace it with the path to the Oracle JDK (for example, /usr/java/jdk1.7.0_67-cloudera/). When prompted, enter the password for the destination and source truststores. The default password for the Oracle JDK cacerts truststore is changeit.
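The $JAVA_HOME fallback can be captured in a small Python sketch that builds the same keytool -importkeystore invocation as an argument list. The helper name and its parameters are hypothetical, and the fallback path is the example JDK path from the text, which must be adjusted for your installation. keytool still prompts interactively for the source and destination truststore passwords when the command runs.

```python
import os
import shlex
from typing import List, Optional

# Example JDK path from the text; adjust for your installation.
FALLBACK_JAVA_HOME = "/usr/java/jdk1.7.0_67-cloudera"


def keytool_import_command(
    dest_truststore: str,
    java_home: Optional[str] = None,
    alias: str = "baltimorecybertrustca",
) -> List[str]:
    """Build the keytool command that copies the root CA entry into a custom truststore."""
    home = java_home or os.environ.get("JAVA_HOME") or FALLBACK_JAVA_HOME
    return [
        os.path.join(home, "bin", "keytool"),
        "-importkeystore",
        "-srckeystore", os.path.join(home, "jre", "lib", "security", "cacerts"),
        "-destkeystore", dest_truststore,
        "-srcalias", alias,
    ]


if __name__ == "__main__":
    # Print the command for review; it could then be run with
    # subprocess.run(cmd, check=True) on each node.
    print(shlex.join(keytool_import_command("/path/to/custom/truststore")))
```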

The truststore configurations for each service that accesses S3 are as follows:

hadoop-aws Connector

All components that can use Amazon S3 storage rely on the hadoop-aws connector, which uses the built-in Java truststore ($JAVA_HOME/jre/lib/security/cacerts). To override this truststore, create a truststore named jssecacerts in the same directory ($JAVA_HOME/jre/lib/security/jssecacerts) on all cluster nodes. If you are using the jssecacerts truststore, make sure that it includes the root CA certificate that signed the Amazon S3 certificate.
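The lookup order behind this override can be sketched in Python. The sketch assumes the JDK 8 directory layout used in this document and models the default JSSE trust manager behavior: an explicit javax.net.ssl.trustStore system property wins if set; otherwise jssecacerts is used if it exists; otherwise cacerts. The function name and the injectable exists check (which lets the logic be tested without touching the file system) are hypothetical.

```python
import os
from typing import Callable, Optional


def effective_truststore(
    java_home: str,
    trust_store_property: Optional[str] = None,
    exists: Callable[[str], bool] = os.path.isfile,
) -> str:
    """Return the truststore file a JSSE client would load by default (sketch)."""
    # 1. An explicit -Djavax.net.ssl.trustStore=... setting takes precedence.
    if trust_store_property:
        return trust_store_property
    security_dir = os.path.join(java_home, "jre", "lib", "security")
    # 2. Otherwise jssecacerts is used if it is present.
    jssecacerts = os.path.join(security_dir, "jssecacerts")
    if exists(jssecacerts):
        return jssecacerts
    # 3. cacerts is the final fallback.
    return os.path.join(security_dir, "cacerts")
```

Running a check like this on every node can confirm which file the connector will load; if it is jssecacerts, that file must contain the root CA that signed the Amazon S3 certificate.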

Hive/Beeline CLI

The Hive and Beeline command line interfaces (CLI) rely on the HiveServer2 truststore. To view or modify the truststore configuration:

- Go to the Hive service in the Cloudera Manager Admin Console.

- Select the Configuration tab.

- Select .

- Select .

- Locate the HiveServer2 TLS/SSL Certificate Trust Store File and HiveServer2 TLS/SSL Certificate Trust Store Password properties or search for them by typing Trust in the Search box.

Impala Shell

The Impala shell uses the hadoop-aws connector truststore. To override it, create the $JAVA_HOME/jre/lib/security/jssecacerts file, as described in hadoop-aws Connector.

Hue S3 File Browser

For instructions on enabling the S3 File Browser in Hue, see How to Enable S3 Cloud Storage in Hue. The S3 File Browser uses TLS if it is enabled and trusts the S3 certificate by default, so no additional configuration is necessary.

Impala Query Editor (Hue)

The Impala query editor in Hue uses the hadoop-aws connector truststore. To override it, create the $JAVA_HOME/jre/lib/security/jssecacerts file, as described in hadoop-aws Connector.

Hive Query Editor (Hue)

The Hive query editor in Hue uses the HiveServer2 truststore. For instructions on viewing and modifying the HiveServer2 truststore, see Hive/Beeline CLI.
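The component-to-truststore relationships described in this section can be summarized as a lookup table, for example when building a per-node checklist. This Python sketch only restates the text above; the enum, dictionary, and function names are hypothetical.

```python
from enum import Enum
from typing import Dict, List


class Truststore(Enum):
    """Truststore sources described in this section."""
    JVM_DEFAULT = "$JAVA_HOME/jre/lib/security/jssecacerts if present, else cacerts"
    HIVESERVER2 = "HiveServer2 TLS/SSL Certificate Trust Store File (Cloudera Manager)"
    HUE_BUILT_IN = "Trusts the S3 certificate by default; no configuration needed"


COMPONENT_TRUSTSTORE: Dict[str, Truststore] = {
    "hadoop-aws connector": Truststore.JVM_DEFAULT,
    "Hive CLI": Truststore.HIVESERVER2,
    "Beeline CLI": Truststore.HIVESERVER2,
    "Impala shell": Truststore.JVM_DEFAULT,
    "Hue S3 File Browser": Truststore.HUE_BUILT_IN,
    "Hue Impala query editor": Truststore.JVM_DEFAULT,
    "Hue Hive query editor": Truststore.HIVESERVER2,
}


def components_using(store: Truststore) -> List[str]:
    """List the components whose S3 TLS trust depends on the given truststore."""
    return sorted(name for name, s in COMPONENT_TRUSTSTORE.items() if s is store)
```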

Data At-Rest Encryption on Amazon S3

Cloudera CDH supports Amazon S3 server-side data-at-rest encryption (see Protecting Data Using Server-Side Encryption with Amazon S3-Managed Encryption Keys (SSE-S3) for details). The hadoop-aws connector transparently submits read and write requests to Amazon S3 using server-side encryption with Amazon S3-managed encryption keys. No other setup is required beyond enabling the mechanism in the cluster-wide configuration file (core-site.xml), as follows:

- Log into the Cloudera Manager Admin Console.

- Select .

- Click the Configuration tab.

- Select .

- Select .

- Locate the Cluster-wide Advanced Configuration Snippet (Safety Valve) for core-site.xml property.

- In the text field, define the server-side encryption algorithm property and its value as shown below:

<property>
  <name>fs.s3a.server-side-encryption-algorithm</name>
  <value>AES256</value>
</property>

- Click Save Changes.

- Restart the HDFS service.
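At the request level, SSE-S3 is requested by sending the x-amz-server-side-encryption: AES256 header with each object write; S3 then encrypts the object at rest and decrypts it transparently on read. The Python sketch below is not the hadoop-aws implementation (the connector delegates to the AWS SDK). It only illustrates, for the write path, how the fs.s3a.server-side-encryption-algorithm setting maps onto that header. The helper name put_object_headers is hypothetical.

```python
from typing import Dict, Mapping

SSE_HEADER = "x-amz-server-side-encryption"
SSE_PROPERTY = "fs.s3a.server-side-encryption-algorithm"


def put_object_headers(conf: Mapping[str, str], content_length: int) -> Dict[str, str]:
    """Build headers for an S3 PUT Object request, adding SSE-S3 if configured."""
    headers = {"Content-Length": str(content_length)}
    algorithm = conf.get(SSE_PROPERTY)
    if algorithm:
        # AES256 is the only value this document allows.
        if algorithm != "AES256":
            raise ValueError(f"unsupported algorithm {algorithm!r}; expected AES256")
        headers[SSE_HEADER] = algorithm
    return headers
```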