Cloudera Security Overview

Any system managing data today must meet security requirements imposed by government and industry regulations, and by the public whose information is held in such systems. As a system designed to support ever-increasing amounts and types of data, Cloudera's Hadoop core and ecosystem components must meet ever-evolving security requirements and be capable of thwarting a variety of attacks against that data.

This overview includes a brief discussion of security requirements and an approach to securing the enterprise data hub in phases, followed by an introduction to Hadoop security architecture. Following these are more detailed overviews of the following:

Security Requirements

Security encompasses broad business and operational goals that can be met by various technologies and processes:

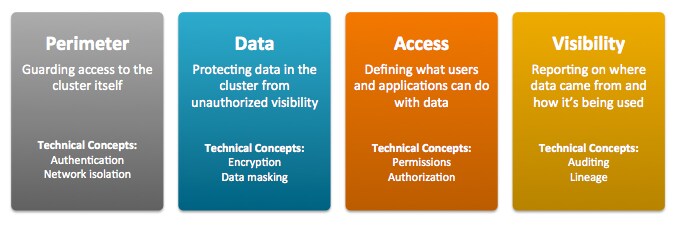

- Perimeter access to the cluster, its data, and its services is limited to specific users, services, and processes. In information security, Authentication can help ensure that only validated users and processes are allowed entry to the cluster, node, or other protected target.

- Data Protection means that whether data is at rest (on the storage system), or in transit (moving from client to server or peer to peer, over the network), it cannot be read or altered, even if it is intercepted. In information security, Encryption helps meet this requirement.

- Access includes defining privileges for users, applications, and processes, and enforcing what they can do within the system and its data. In information security, Authorization mechanisms are designed to meet this requirement.

- Visibility or transparency means that data can be fully monitored, including where, when, and how data is used or modified. In information security, Auditing mechanisms can meet these and other requirements related to data governance.

The Hadoop ecosystem covers a wide range of applications, datastores, and computing frameworks, and each of these components implements these security capabilities differently.

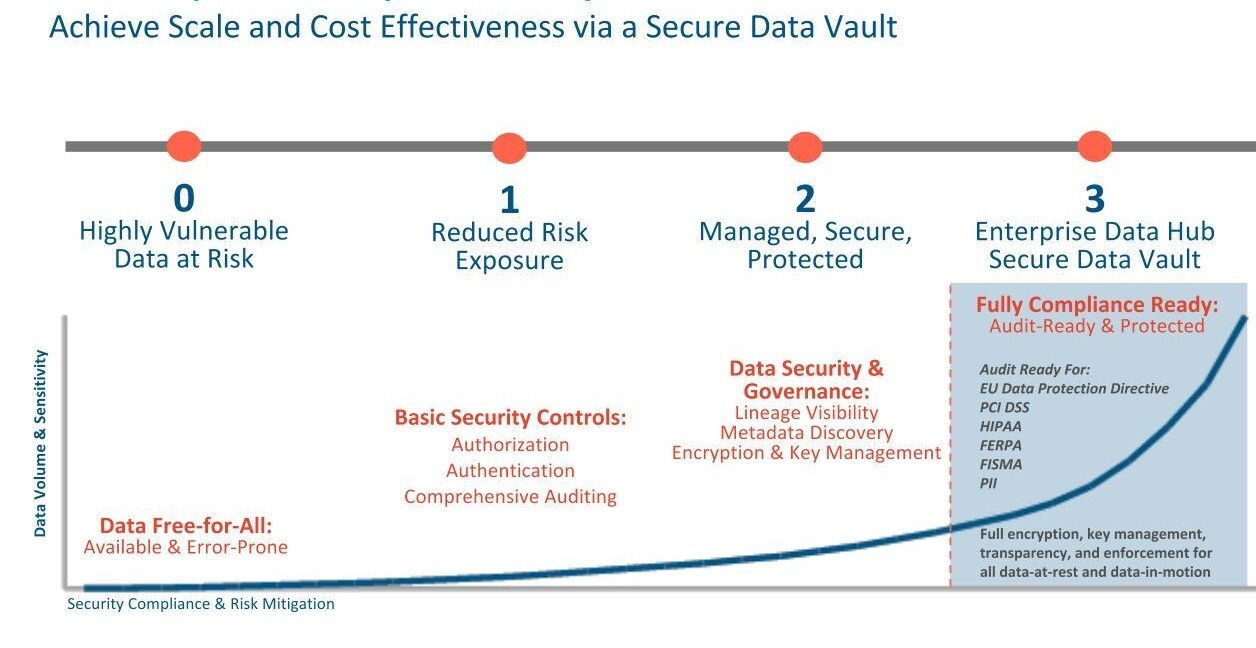

Securing a Hadoop Cluster in Phases

- Phase 0: A non-secure cluster. Set up a fully functioning cluster without any security. Never use such a system in a production environment: it is vulnerable to any and all attacks and exploits.

- Phase 1: A minimally secure cluster includes authentication, authorization, and auditing. First, configure authentication so that users and services cannot access the cluster until they prove their identities. Next, configure simple authorization mechanisms that let you assign privileges to users and user groups. Set up auditing procedures to keep track of who accesses the cluster (and how).

- Phase 2: For more robust security, encrypt sensitive data at a minimum. Use key-management systems to handle encryption keys. Set up auditing on data in metastores, and regularly review and update the system metadata. Ideally, set up your cluster so that you can trace the lineage of any data object and meet any goals that may fall under the rubric of data governance.

- Phase 3: Most secure. The secure enterprise data hub (EDH) is one in which all data, both data-at-rest and data-in-transit, is encrypted and the key management system is fault-tolerant. Auditing mechanisms comply with industry, government, and regulatory standards (PCI, HIPAA, NIST, for example), and extend from the EDH to the other systems that integrate with it. Cluster administrators are well-trained, and security procedures have been certified by an expert.
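As a minimal sketch of the Phase 1 authentication and authorization step, the Python snippet below builds a core-site.xml fragment that sets two standard Hadoop properties: hadoop.security.authentication (changed from its default of simple to kerberos) and hadoop.security.authorization (which turns on service-level authorization checks). It assumes a Kerberos KDC, such as Active Directory, and the service principals already exist. In a Cloudera Manager deployment these settings are normally applied through Cloudera Manager rather than by editing XML by hand, so treat this as an illustration of the underlying properties, not a deployment procedure.

```python
import xml.etree.ElementTree as ET

# Phase 1 settings (illustrative). Both keys are standard core-site.xml
# properties; the Kerberos KDC and service principals are assumed to exist.
PHASE1_PROPERTIES = {
    "hadoop.security.authentication": "kerberos",  # Hadoop default: "simple"
    "hadoop.security.authorization": "true",       # Hadoop default: "false"
}


def build_core_site(properties: dict[str, str]) -> str:
    """Return a core-site.xml <configuration> document for the given properties."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(build_core_site(PHASE1_PROPERTIES))
```

Generating the XML from a dictionary keeps the security-relevant settings in one place, which makes them easier to review during the auditing step of Phase 1.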

Implementing all three levels of security (Phases 1 through 3) ensures that your Cloudera EDH cluster can pass technical review and comply with the strictest of regulations.

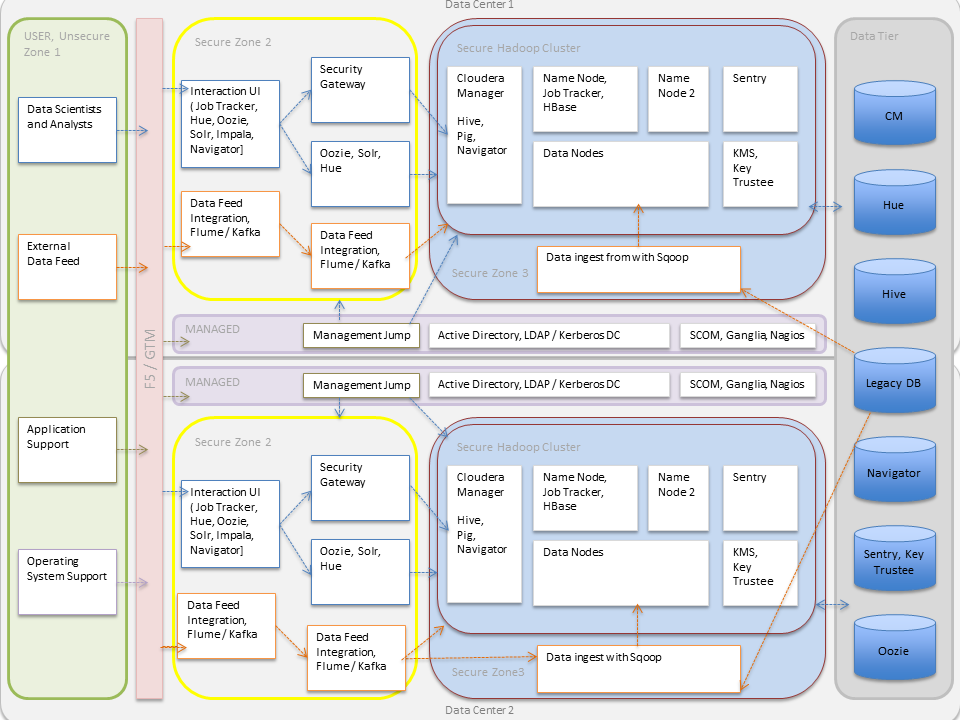

Hadoop Security Architecture

- As illustrated, external data streams can be authenticated by mechanisms in place for Flume and Kafka. Data from legacy databases is ingested using Sqoop. Users such as data scientists and analysts can interact directly with the cluster using interfaces such as Hue or Cloudera Manager. Alternatively, they can use a service such as Impala to create and submit jobs for data analysis. All of these interactions can be protected by an Active Directory Kerberos deployment.

- Encryption can be applied to data at rest using transparent HDFS encryption with an enterprise-grade Key Trustee Server. Cloudera also recommends using Navigator Encrypt to protect data associated with Cloudera Manager, Cloudera Navigator, the Hive and HBase metastores, and any log files or spills.

- Authorization policies can be enforced using Sentry (for services such as Hive, Impala, and Search) as well as HDFS Access Control Lists (ACLs).

- Auditing capabilities can be provided by using Cloudera Navigator.
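The encryption-at-rest and ACL mechanisms above can be sketched with standard Hadoop command-line tools. The Python snippet below only assembles and prints the commands; it does not run them. The key name sales_key, the path /data/sales, and the user alice are hypothetical. The sketch assumes the cluster already has a key provider (KMS) configured, for example one backed by Key Trustee Server, that the encryption zone is created by the HDFS superuser on an empty directory, and that HDFS ACLs are enabled (dfs.namenode.acls.enabled=true). Sentry policies for Hive and Impala are managed separately through SQL GRANT statements and are not shown.

```python
import shlex

# Hypothetical values, for illustration only.
KEY_NAME = "sales_key"
ZONE_PATH = "/data/sales"
ANALYST_USER = "alice"


def encryption_and_acl_commands(key: str, path: str, user: str) -> list[list[str]]:
    """Return argv lists for creating an encryption zone and adding one ACL entry."""
    return [
        # Create the encryption zone key in the configured KMS.
        ["hadoop", "key", "create", key],
        # An encryption zone must be created on an empty directory.
        ["hdfs", "dfs", "-mkdir", "-p", path],
        # Mark the directory as an encryption zone (requires HDFS superuser).
        ["hdfs", "crypto", "-createZone", "-keyName", key, "-path", path],
        # Grant one user read and traverse (r-x) access with an HDFS ACL entry.
        ["hdfs", "dfs", "-setfacl", "-m", f"user:{user}:r-x", path],
    ]


if __name__ == "__main__":
    for argv in encryption_and_acl_commands(KEY_NAME, ZONE_PATH, ANALYST_USER):
        print(shlex.join(argv))
```

Keeping each command as an argument list makes it straightforward to pass to subprocess.run later if you decide to automate these steps, while shlex.join produces shell-safe text for manual review.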