Encryption Overview

Cloudera provides various mechanisms to protect data persisted to disk or other storage media (data-at-rest) and to protect data as it moves among processes in the cluster or over the network (data-in-transit).

Data-at-rest encryption, often referred to simply as data encryption, has long been mandatory for many government, financial, and regulatory entities to meet privacy and other security requirements. For example, the payment card industry has adopted the Payment Card Industry Data Security Standard (PCI DSS) for information security. Other examples include requirements imposed by the United States government's Federal Information Security Management Act (FISMA) and the Health Insurance Portability and Accountability Act (HIPAA).

To help organizations comply with regulations such as these, Cloudera provides mechanisms to encrypt data at rest and data in transit, all of which can be centrally deployed and managed for the cluster using Cloudera Manager Server. This section provides an overview of those mechanisms.

Protecting Data At-Rest

Protecting data at rest typically means encrypting the data when it is stored on disk and letting authorized users and processes (and only authorized users and processes) decrypt the data when needed for the application or task at hand. Data-at-rest encryption adds operational work: encryption keys must be distributed and managed, keys should be rotated or changed on a regular basis to reduce the risk of compromise, and many other factors complicate the process.

However, encrypting data alone may not be sufficient. For example, administrators and others with sufficient privileges may have access to personally identifiable information (PII) in log files, audit data, or SQL queries. Depending on the specific use case, for example in a hospital or financial environment, the PII may need to be redacted from all such files so that users with privileges on the logs and queries that might contain sensitive data are nonetheless unable to view that data when they should not.

Cloudera provides complementary approaches to encrypting data at rest, and provides mechanisms to mask PII in log files, audit data, and SQL queries.

Encryption Options Available

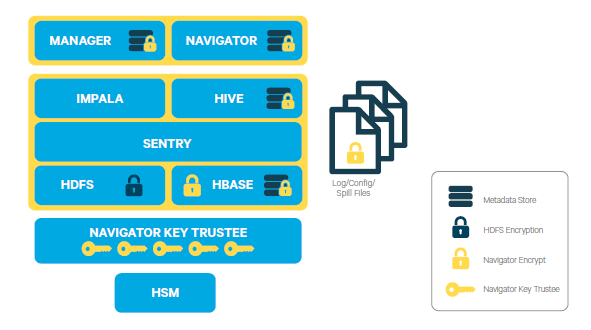

Cloudera provides several mechanisms to ensure that sensitive data is secure. CDH provides transparent HDFS encryption, ensuring that all sensitive data is encrypted before being stored on disk. HDFS encryption, when combined with the enterprise-grade encryption key management of Navigator Key Trustee, enables regulatory compliance for most enterprises. For Cloudera Enterprise, HDFS encryption can be augmented by Navigator Encrypt to secure metadata in addition to data. Cloudera clusters that use these solutions run as usual and have very low performance impact, because encryption is performed in parallel across the DataNodes. As the cluster grows, encryption capacity grows with it.

Additionally, this transparent encryption is optimized for Intel processors. Intel processors include the AES-NI instruction set, which accelerates AES encryption and decryption in hardware. Cloudera leverages the latest Intel advances for even faster performance.

The Key Trustee KMS, used in conjunction with Key Trustee Server and Key HSM, provides HSM-based protection of stored key material. The Key Trustee KMS generates encryption zone key material locally on the KMS and then encrypts this key material using an HSM-generated key. Navigator HSM KMS services, in contrast, rely on the HSM for all encryption zone key generation and storage. When using the Navigator HSM KMS, encryption zone key material originates on the HSM and never leaves the HSM. This allows for the highest level of key isolation, but requires some overhead for network calls to the HSM for key generation, encryption and decryption operations. The Key Trustee KMS remains the recommended key management solution for HDFS encryption for most production scenarios.

In summary, the encryption components described above are:

- Cloudera Transparent HDFS Encryption to encrypt data stored on HDFS

- Navigator Encrypt for all other data (including metadata, logs, and spill data) associated with Cloudera Manager, Cloudera Navigator, Hive, and HBase

- Navigator Key Trustee for robust, fault-tolerant key management

In addition to applying encryption to the data layer of a Cloudera cluster, encryption can also be applied at the network layer, to encrypt communications among nodes of the cluster. See Configuring Encryption for more information.

Encryption does not prevent administrators with full access to the cluster from viewing sensitive data. To obfuscate sensitive data, including PII, the cluster can be configured for data redaction.

Data Redaction for Cloudera Clusters

Redaction is a process that obscures data. It can help organizations comply with industry regulations and standards, such as PCI (Payment Card Industry) and HIPAA, by obfuscating personally identifiable information (PII) so that it is not usable except by those whose jobs require such access. For example, HIPAA legislation requires that patient PII not be available to anyone other than the appropriate physician (and the patient), and that a patient's PII cannot be used to determine or associate an individual's identity with health data. Data redaction is one process that can help ensure this privacy by transforming PII into meaningless patterns, for example, transforming U.S. social security numbers to XXX-XX-XXXX strings.

Data redaction works separately from Cloudera encryption techniques, which do not preclude administrators with full access to the cluster from viewing sensitive user data. Redaction ensures that cluster administrators, data analysts, and others cannot see PII or other sensitive data that is not within their job domain. At the same time, it does not prevent users with appropriate permissions from accessing data to which they have privileges.
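The social security number transformation mentioned above can be illustrated with a short pattern-replacement sketch. This is not how Cloudera implements redaction; the function name `redact_ssn` and the regular expression are assumptions made for illustration, and real redaction rules are configured in the cluster rather than written as application code.

```python
import re

# Hypothetical pattern: U.S. social security numbers written as NNN-NN-NNNN.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact_ssn(text: str) -> str:
    """Replace every SSN-shaped token in text with the mask XXX-XX-XXXX."""
    return SSN_PATTERN.sub("XXX-XX-XXXX", text)


# Example: a log line that contains a query with an SSN literal.
log_line = "INFO query: SELECT * FROM patients WHERE ssn = '123-45-6789'"
print(redact_ssn(log_line))
```

The mask keeps the original layout, so redacted log lines and queries remain readable while the sensitive digits are no longer recoverable from the text.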

See How to Enable Sensitive Data Redaction for details.

Protecting Data In-Transit

- HDFS Transparent Encryption: Data encrypted using HDFS Transparent Encryption is protected end-to-end. Data written to or read from HDFS can only be encrypted or decrypted by the client. HDFS does not have access to the unencrypted data or the encryption keys. This supports both at-rest encryption and in-transit encryption.

- Data Transfer: The first channel is data transfer, including the reading and writing of data blocks to HDFS. Hadoop uses a SASL-enabled wrapper around its native direct TCP/IP-based transport, called DataTransferProtocol, to secure the I/O streams within a DIGEST-MD5 envelope (for steps, see Configuring Encrypted HDFS Data Transport). This procedure also employs secured HadoopRPC (see Remote Procedure Calls) for the key exchange. The HttpFS REST interface, however, does not provide secure communication between the client and HDFS, only secured authentication using SPNEGO.

For the transfer of data between DataNodes during the shuffle phase of a MapReduce job (that is, moving intermediate results between the Map and Reduce portions of the job), Hadoop secures the communication channel with HTTP Secure (HTTPS) using Transport Layer Security (TLS). See Encrypted Shuffle and Encrypted Web UIs.

- Remote Procedure Calls: The second channel is remote procedure calls (RPC) to the various systems and frameworks within a Hadoop cluster. Like data transfer activities, Hadoop has its own native protocol for RPC, called HadoopRPC, which is used for Hadoop API client communication, intra-Hadoop services communication, as well as monitoring, heartbeats, and other non-data, non-user activity. HadoopRPC is SASL-enabled for secured transport and defaults to Kerberos or DIGEST-MD5, depending on the type of communication and security settings. For steps, see Configuring Encrypted HDFS Data Transport.

- User Interfaces: The third channel includes the various web-based user interfaces within a Hadoop cluster. For secured transport, the solution is straightforward; these interfaces employ HTTPS.
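For the data transfer and RPC channels described above, one way to confirm that wire encryption is switched on is to inspect the Hadoop configuration. The sketch below assumes the standard Apache Hadoop properties `hadoop.rpc.protection` (normally in `core-site.xml`) and `dfs.encrypt.data.transfer` (normally in `hdfs-site.xml`). The helper names and the merged sample document are hypothetical, and a cluster managed by Cloudera Manager would normally set these values through the Cloudera Manager interface rather than by editing files.

```python
import xml.etree.ElementTree as ET


def load_properties(xml_text: str) -> dict:
    """Parse a Hadoop-style <configuration> document into a name -> value dict."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def wire_encryption_gaps(props: dict) -> list:
    """Return the property names whose values leave a channel unencrypted."""
    gaps = []
    # Assumption: "privacy" is the SASL protection level that adds encryption.
    if props.get("hadoop.rpc.protection", "authentication") != "privacy":
        gaps.append("hadoop.rpc.protection")
    # Block data transfer is only encrypted when this flag is true.
    if props.get("dfs.encrypt.data.transfer", "false").lower() != "true":
        gaps.append("dfs.encrypt.data.transfer")
    return gaps


# Hypothetical sample that merges both files into one document for brevity.
SAMPLE = """
<configuration>
  <property><name>hadoop.rpc.protection</name><value>privacy</value></property>
  <property><name>dfs.encrypt.data.transfer</name><value>false</value></property>
</configuration>
"""

print(wire_encryption_gaps(load_properties(SAMPLE)))
```

An empty list means both channels are configured for encryption under these assumptions. The check is deliberately strict and treats anything other than a single `privacy` value for the RPC setting as a gap.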

TLS/SSL Certificates Overview

| Type | Usage Note |
|---|---|
| Public CA-signed certificates | Recommended. Using certificates signed by a trusted public CA simplifies deployment because the default Java client already trusts most public CAs. Obtain certificates from one of the trusted well-known (public) CAs, such as Symantec and Comodo, as detailed in Generate TLS Certificates. |
| Internal CA-signed certificates | Obtain certificates from your organization's internal CA if your organization has its own. Using an internal CA can reduce costs (although cluster configuration may require establishing the trust chain for certificates signed by an internal CA, depending on your IT infrastructure). See Configuring TLS Encryption for Cloudera Manager for information about establishing trust as part of configuring a Cloudera Manager cluster. |
| Self-signed certificates | Not recommended for production deployments. Using self-signed certificates requires configuring each client to trust the specific certificate (in addition to generating and distributing the certificates). However, self-signed certificates are fine for non-production (testing or proof-of-concept) deployments. See How to Use Self-Signed Certificates for TLS for details. |

For more information on setting up SSL/TLS certificates, see Encrypting Data in Transit.
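The difference between the certificate types is largely a question of how each client establishes trust. The sketch below shows that idea for a Python client, using the standard `ssl` module. It is an illustration only, since Cloudera components rely on Java truststores, and the function name `make_client_context` and the `ca_bundle` parameter are hypothetical.

```python
import ssl
from typing import Optional


def make_client_context(ca_bundle: Optional[str] = None) -> ssl.SSLContext:
    """Build a TLS client context that verifies the server certificate.

    With ca_bundle=None the platform's default trusted CAs are used, which
    corresponds to the public CA case. Passing a PEM file adds an internal CA
    (or a specific self-signed certificate) to the trusted set.
    """
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    if ca_bundle is not None:
        # Hypothetical path supplied by the caller, for example an internal CA chain.
        context.load_verify_locations(cafile=ca_bundle)
    return context


ctx = make_client_context()
# Certificate verification and hostname checking are on by default.
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)
```

With a public CA no extra argument is needed. With an internal CA or self-signed certificates, every client must be given the right bundle, which is the distribution overhead the table describes.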

TLS/SSL Encryption for CDH Components

Cloudera recommends securing a cluster using Kerberos authentication before enabling encryption such as SSL on a cluster. If you enable SSL for a cluster that does not already have Kerberos authentication configured, a warning will be displayed.

- HDFS, MapReduce, and YARN daemons act as both SSL servers and clients.

- HBase daemons act as SSL servers only.

- Oozie daemons act as SSL servers only.

- Hue acts as an SSL client to all of the above.

For information on setting up SSL/TLS for CDH services, see Configuring TLS/SSL Encryption for CDH Services.

Data Protection within Hadoop Projects

The table below lists the various encryption capabilities that can be leveraged by CDH components and Cloudera Manager.

| Project | Encryption for Data-in-Transit | Encryption for Data-at-Rest (HDFS Encryption + Navigator Encrypt + Navigator Key Trustee) |
|---|---|---|
| HDFS | SASL (RPC), SASL (DataTransferProtocol) | Yes |
| MapReduce | SASL (RPC), HTTPS (encrypted shuffle) | Yes |
| YARN | SASL (RPC) | Yes |
| Accumulo | Partial - Only for RPCs and Web UI (Not directly configurable in Cloudera Manager) | Yes |
| Flume | TLS (Avro RPC) | Yes |
| HBase | SASL - For web interfaces, inter-component replication, the HBase shell and the REST, Thrift 1 and Thrift 2 interfaces | Yes |
| HiveServer2 | SASL (Thrift), SASL (JDBC), TLS (JDBC, ODBC) | Yes |
| Hue | TLS | Yes |
| Impala | TLS or SASL between impalad and clients, but not between daemons | |
| Oozie | TLS | Yes |
| Pig | N/A | Yes |
| Search | TLS | Yes |
| Sentry | SASL (RPC) | Yes |
| Spark | None | Yes |
| Sqoop | Partial - Depends on the RDBMS database driver in use | Yes |
| Sqoop2 | Partial - You can encrypt the JDBC connection depending on the RDBMS database driver | Yes |
| ZooKeeper | SASL (RPC) | No |
| Cloudera Manager | TLS - Does not include monitoring | Yes |
| Cloudera Navigator | TLS - Also see Cloudera Manager | Yes |
| Backup and Disaster Recovery | TLS - Also see Cloudera Manager | Yes |