Cloudera and NVIDIA make AI easier and faster

Organizations today are navigating an increasingly complex data landscape, striving to unlock the full potential of AI and machine learning. The collaboration between Cloudera and NVIDIA is designed to accelerate and simplify the development, deployment, and operation of AI, particularly generative AI, across vast and sensitive data estates.

Why Cloudera + NVIDIA?

The partnership integrates NVIDIA accelerated compute and AI software into Cloudera's AI platform to speed up enterprise AI. Together, they help enterprises turn data into actionable insights and deploy generative and agentic AI use cases. They also drive faster innovation, so organizations can keep a competitive advantage in a rapidly evolving landscape.

About NVIDIA

NVIDIA is tackling challenges no one else can solve. NVIDIA's work ignited the era of modern AI and is fueling industrial digitalization across markets.

Key Highlights

CATEGORY

Independent Hardware Vendor (IHV)

Partnership Highlights

- April 12, 2021: Cloudera and NVIDIA announced a collaboration to accelerate data analytics and AI at scale by integrating the NVIDIA RAPIDS Accelerator for Apache Spark 3.0 into Cloudera Data Platform.

- March 19, 2024: Cloudera announced an expanded collaboration with NVIDIA to integrate NVIDIA NIM microservices into Cloudera Machine Learning (now called Cloudera AI) to enhance generative AI capabilities.

- October 8, 2024: Cloudera announced the general availability (GA) of its AI Inference service, powered by NVIDIA NIM microservices, to accelerate generative AI deployments for enterprises.

- November 25, 2024: Cloudera announced that Mercy Corps was using its AI Inference service, powered by NVIDIA NIM microservices, for its Tech to the Rescue “AI for Changemakers” program on Amazon Web Services (AWS).

- August 7, 2025: Cloudera AI Inference service on premises launched in Technical Preview as part of the GA release of Cloudera Data Services 1.5.5.

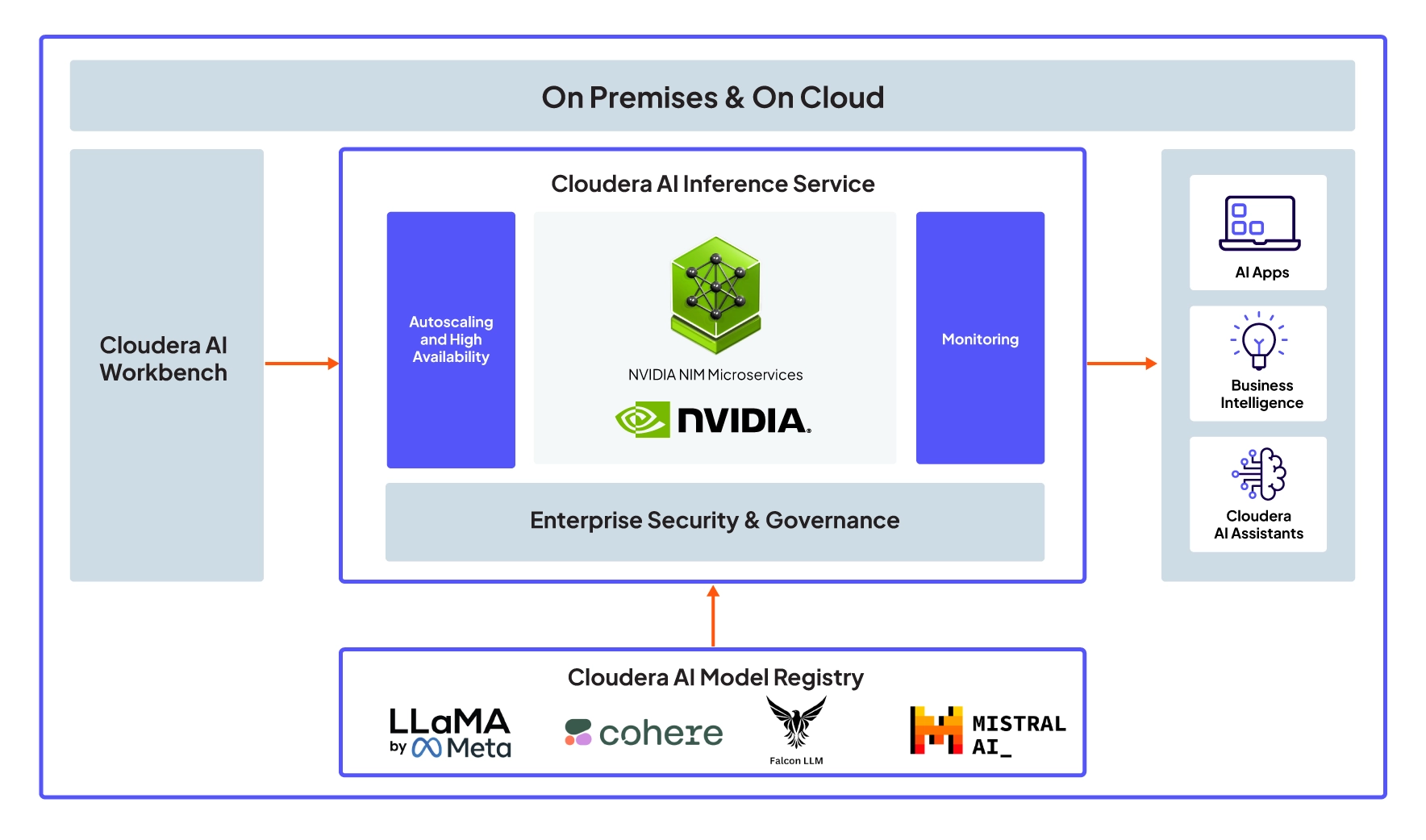

How Cloudera and NVIDIA deliver private AI to customers

Cloudera champions private AI, empowering organizations to build and run AI models and applications using their proprietary data within their security perimeter. This approach ensures data sovereignty and compliance with stringent regulatory requirements.

Cloudera believes in bringing AI compute to the data rather than moving data to AI. This gives enterprises that must maintain control over sensitive information a secure and efficient solution. Cloudera AI Inference service, powered by NVIDIA NIM microservices, enables customers to deploy private AI both in the cloud and on premises.

Accelerated compute anywhere

Access GPUs anywhere and scale AI workloads on GPUs with a better TCO

Private AI

Deploy apps & models securely within your environment, on premises or in the public cloud

Any model

Enterprise context for any foundation model, LLM, or transformer library

Applications & agents

Build agentic apps with Cloudera AI Workbench and the AI ecosystem

Trusted data: Bring your models to the data, not the data to your models

Cloudera AI Inference service, powered by NVIDIA NIM microservices

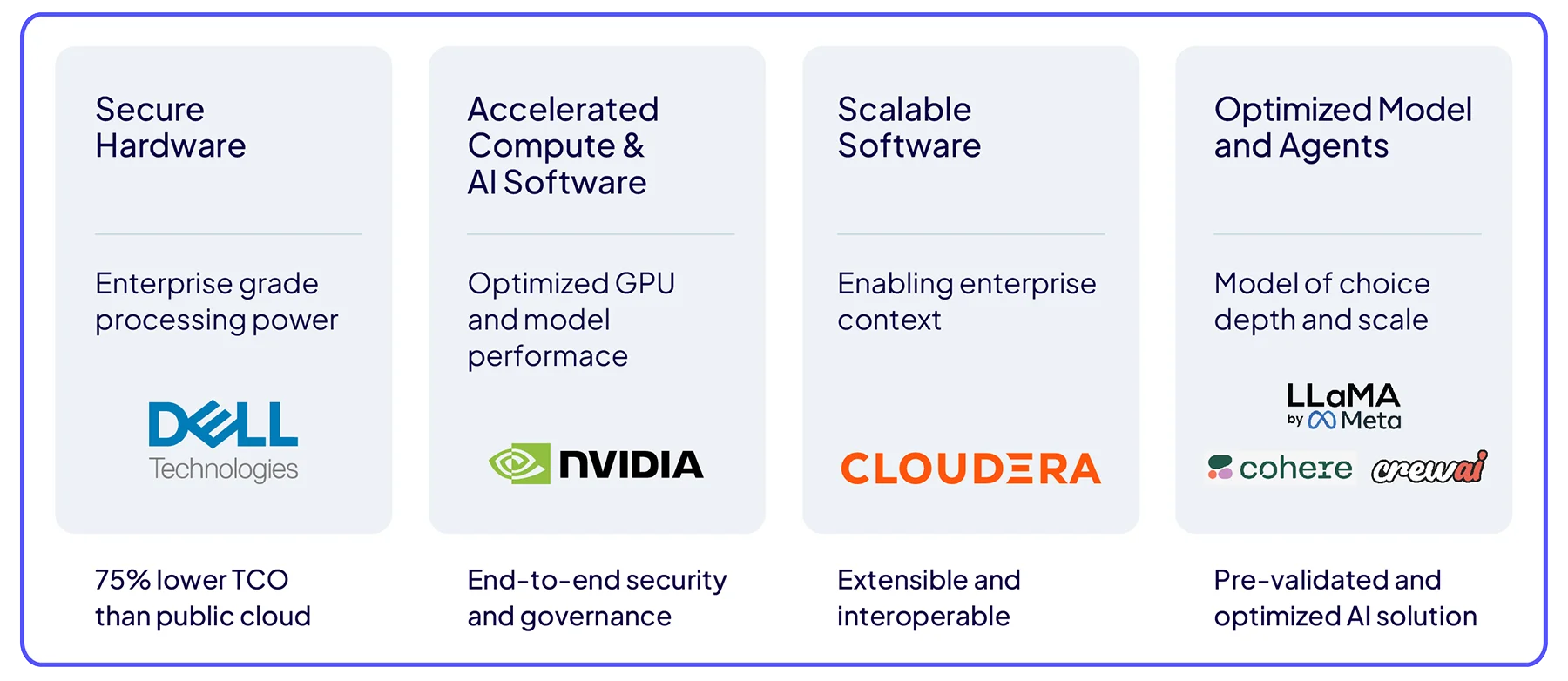

AI in a Box: Delivering private AI on premises

A joint initiative by Cloudera, Dell Technologies, and NVIDIA, AI in a Box is designed for the rapid, secure deployment of enterprise-grade private AI on premises. It combines Dell’s robust infrastructure and NVIDIA’s high-performance accelerated compute and AI software with Cloudera’s data platform to deliver a pre-validated, end-to-end AI solution. This approach allows businesses to achieve significant cost savings, faster ROI, and stronger security while scaling AI initiatives efficiently.