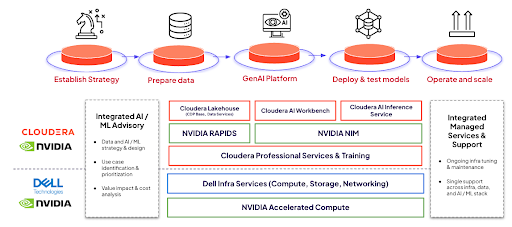

The race to operationalize private AI at enterprise scale isn’t just about models and algorithms—it’s about infrastructure that refuses to compromise. Welcome to the inaugural post of AI in a Box, a three-part blog series that unpacks how Cloudera, Dell Technologies, and NVIDIA are redefining enterprise AI with a turnkey solution that unifies cutting-edge AI-optimized hardware, intelligent data orchestration and AIOps tooling, and zero-trust governance. No more duct-taping legacy systems or gambling on cloud-only “black boxes.”

Cloudera, Dell, and NVIDIA are propelling enterprises into the AI fast lane with a fully managed service that pairs hardware innovation with operational simplicity. This isn’t about squeezing legacy infrastructure to run AI; it’s about unleashing the full potential of your data with the latest high-performance Dell PowerEdge servers, NVIDIA accelerated compute, NVIDIA RAPIDS, NVIDIA NIM, and Cloudera AI. Together, these components build efficient, effective data pipelines, all orchestrated as a turnkey enterprise AI solution.

Speed to Market with Seamless AI, from Development to Deployment

Accelerated time to value starts at the silicon layer. Dell PowerEdge servers with NVIDIA accelerated compute, running NVIDIA RAPIDS and NVIDIA NIM, provide the high-performance foundation that today’s most demanding AI workloads require, whether that means adapting billion-parameter models to proprietary data or delivering low-latency inference at scale.

Cloudera AI, built on this foundation and delivered as a fully managed service, eliminates operational complexity. It automatically provisions GPU clusters for tasks such as fine-tuning domain-specific LLMs or powering real-time retrieval-augmented generation (RAG) pipelines, then dynamically reallocates those resources as demand shifts to maximize efficiency.

The result? No more infrastructure sizing guesswork or compatibility issues—just a seamless private AI journey from development to deployment.

The speed advantage extends to pre-integrated AI blueprints. A financial institution can roll out fraud detection models in days, not months, using pre-optimized workflows that plug directly into existing transaction systems. A manufacturer can activate predictive maintenance by training on sensor data stored in Dell’s high-performance storage, with Cloudera auto-scaling GPU resources as demand spikes. These aren’t generic templates but battle-tested pipelines refined across multiple verticals, and they enforce zero-trust security and granular governance throughout.

Comply with Emerging Regulations by Securing Sensitive Data Across the Private AI Lifecycle

Security and compliance are engineered into AI in a Box, ensuring data protection and regulatory adherence without sacrificing agility. The solution combines hardware-enforced isolation through NVIDIA Multi-Instance GPU (MIG) technology with Cloudera’s unified governance to secure sensitive data across the AI lifecycle. Healthcare, finance, and government organizations benefit from HIPAA-compliant diagnostics, air-gapped underwriting models, and immutable audit trails that help them meet local and global compliance requirements.

For financial services, the stack accelerates AML/BSA compliance with AI-driven transaction monitoring, anomaly detection, and real-time reporting. It aligns with the EU AI Act and with SEC and FCA regulations through continuous monitoring, bias mitigation tools, and explainable AI workflows. Data residency controls and a zero-trust architecture address GDPR and CCPA mandates, while end-to-end audit trails provide transparency for credit risk and fraud detection models.

Proactive threat detection, automated incident response, and adherence to Basel Committee-aligned frameworks help minimize breaches and regulatory risk. AI in a Box lets institutions scale AI confidently and turn regulatory complexity into a source of competitive advantage. Managed updates and patching help teams strike the right balance between innovation and production-scale operations.

Maximize Cost Efficiency with Scalable and Extensible AI

By colocating AI compute with on-premises data lakes on Dell’s scalable storage, the solution keeps petabytes of data local and avoids the latency penalties and egress fees of cloud-centric AI. NVIDIA’s latest GPUs cut training times by up to half compared to prior generations, while Cloudera’s policy-driven autoscaling keeps resources closely matched to workload demand.

The result? Optimized private AI workload economics and a predictable cost base that move AI out of experimental sandboxes and make it a core value engine driving enterprise outcomes.

But the real game-changer is agility. Enterprises can pivot on a dime: today’s customer churn model becomes tomorrow’s supply chain optimizer, all on the same infrastructure. The integrated full-stack AI software accelerates everything from hybrid data pipeline management to serving model endpoints, while the data lineage built into Cloudera’s Shared Data Experience (SDX) traces each endpoint back to its source data, which is critical for audits in regulated industries. Dell’s forward-compatible infrastructure supports adoption of next-generation chipsets without costly re-architecture, which also helps keep the data center sustainable.

Experience the Future of Private AI

At its core, AI in a Box is silicon-to-inference synergy. Dell PowerEdge servers, armed with NVIDIA H100 GPUs and NVLink-switched topology, deliver FP8-precision performance for trillion-parameter training and real-time RAG pipelines. Kubernetes-driven orchestration auto-provisions GPU clusters, dynamically allocating resources to tasks like fine-tuning Mistral-7B models or parallelizing MONAI medical imaging workflows. Cloudera’s Data Fabric unifies streaming and batch ingestion into optimized Parquet sinks, while SDX enforces strong data access control and granular governance—tracking lineage from raw data to model predictions.

This is enterprise AI stripped of excuses. No more waiting for cloud migrations, wrestling with fragmented tools, or trading compliance for innovation. With Cloudera AI and Dell infrastructure delivered as a managed service, powered by NVIDIA accelerated compute and NVIDIA NIM, you’re not just deploying AI; you’re operationalizing it. Fast. Secure. Future-proof.

Reimagine Possibilities with AI in a Box

Schedule a Consultation: Ready to transform your AI strategy after Dell Technologies World (DTW)? Connect with us to continue the conversation, schedule a custom workshop, or kick off a pilot initiative that drives measurable outcomes for your business.