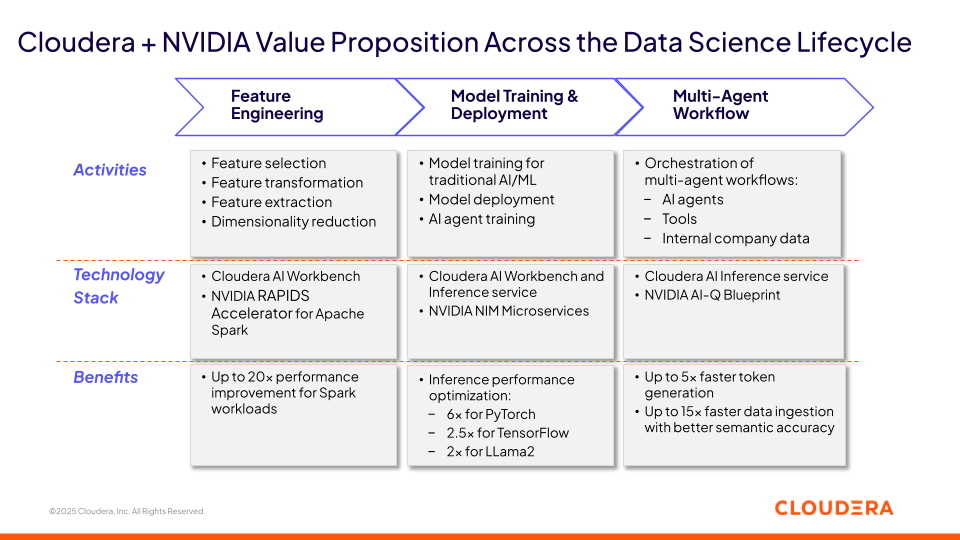

Cloudera and NVIDIA enable organizations to streamline complex data pipelines at scale by combining Cloudera’s data management capabilities with NVIDIA’s full-stack services:

Data processing with Apache Spark on Cloudera and the NVIDIA RAPIDS Accelerator for Apache Spark streamlines the execution of feature engineering and data engineering workloads.

AI/ML model deployment with Cloudera AI Inference and NVIDIA NIM microservices improves the throughput and latency of artificial intelligence (AI) models (both traditional AI/ML and generative AI).

Agentic AI orchestration with NVIDIA AI-Q Blueprint enables AI agents to integrate with private data and interact with other systems through APIs.

Figure 1: Cloudera and NVIDIA deliver value across the data science lifecycle

In this blog, we highlight three use cases that showcase how Cloudera and NVIDIA together deliver value with analytics and AI for financial services institutions.

NVIDIA RAPIDS Accelerator for Apache Spark for AML/KYC Compliance

The anti-money laundering and know your customer (AML/KYC) compliance lifecycle in large financial organizations is a highly compute-intensive process. This is due to the need to integrate and standardize vast volumes of data across various activities, such as:

Entity resolution, which requires the standardization of cross-border data subject to different data clearance processes and sourced from a wide range of transactional systems and external entities (such as credit card transactions, wire transfers, and SWIFT messages).

Data consolidation from multiple AML/KYC systems that store information in different formats, which must be normalized into a unified schema and structured into data products (such as cross-business-unit AML data marts).

Ongoing transaction monitoring and regulatory reporting that require data processing, enrichment, and the application of rules.

For many Cloudera customers who have implemented AML/KYC use cases, Apache Spark plays a pivotal role in enabling these analytic workloads. Apache Spark is a powerful engine for data engineering, providing capabilities like in-memory computing and distributed processing. However, the surge in transaction volumes and the increasing variety of new data sources for AML/KYC compliance place additional strain on existing compute infrastructure, demanding even greater performance.

The NVIDIA RAPIDS Accelerator for Apache Spark transparently offloads specific data processing operations from CPU to GPU, meaning that no code modifications are required. As a result, Cloudera customers have experienced performance improvements of up to 20x by using the NVIDIA RAPIDS Accelerator for Apache Spark 3.0 workloads.
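To illustrate what "without any code modifications" can look like in practice, here is a minimal sketch of how GPU offload is typically switched on through Spark configuration rather than application code. The configuration keys are the standard Spark and RAPIDS Accelerator settings; the jar path, the GPU counts, and the helper function names are assumptions made for this example, not values from a Cloudera deployment.

```python
from typing import Dict, List


def rapids_spark_conf(plugin_jar: str,
                      gpus_per_executor: int = 1,
                      tasks_per_gpu: int = 4) -> Dict[str, str]:
    """Build Spark settings that load the RAPIDS Accelerator plugin.

    plugin_jar, gpus_per_executor, and tasks_per_gpu are illustrative
    assumptions; tune them for the actual cluster.
    """
    return {
        # Make the accelerator jar available to the driver and executors.
        "spark.jars": plugin_jar,
        # Load the RAPIDS SQL plugin; existing Spark SQL/DataFrame code is unchanged.
        "spark.plugins": "com.nvidia.spark.SQLPlugin",
        # Allow supported SQL operations to run on the GPU.
        "spark.rapids.sql.enabled": "true",
        # Standard Spark resource scheduling: GPUs per executor and share per task.
        "spark.executor.resource.gpu.amount": str(gpus_per_executor),
        "spark.task.resource.gpu.amount": str(1 / tasks_per_gpu),
    }


def to_spark_submit_args(conf: Dict[str, str]) -> List[str]:
    """Flatten the settings into '--conf key=value' arguments for spark-submit."""
    args: List[str] = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


if __name__ == "__main__":
    # Hypothetical jar location, for illustration only.
    conf = rapids_spark_conf("/opt/jars/rapids-4-spark.jar")
    print(" ".join(to_spark_submit_args(conf)))
```

The same job script can then be submitted with or without these arguments, which is what makes the acceleration transparent to the AML/KYC pipeline code.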

NVIDIA NIM Microservices for Fraud Prevention in Payments

Two of the greatest challenges in fraud prevention are the explosion in transaction volumes in digital and credit card payments and the increasing sophistication of fraud techniques. These factors have led to resource contention and scalability challenges for AI/ML inference, necessitating the deployment of multiple composable AI/ML models to address emerging fraud methods.

To tackle these challenges, the Cloudera AI Inference service includes NVIDIA NIM microservices, which are designed to deliver low-latency, high-throughput inference for fraud prevention AI models on NVIDIA accelerated computing. For example, by using NVIDIA NIM, the Cloudera AI Inference service can deliver up to a 6x performance improvement for PyTorch models (using the Torch-TensorRT library) and up to a 2.5x improvement for TensorFlow models (using the TF-TensorRT library), both of which are widely used in payments fraud prevention.

In addition, the Cloudera AI Inference service accelerates inference requests executed on NVIDIA accelerated computing by leveraging NVIDIA’s dynamic batching feature. This feature combines individual inference requests into batches on the server side, avoiding the inefficiency of processing one request at a time, which leaves much of the GPU idle. As a result, the Cloudera AI Inference service with NVIDIA NIM improves GPU utilization, which can reduce the future GPU capital expenditure needed to meet growing demand for fraud prevention.
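The idea behind dynamic batching can be sketched with a toy scheduler. This is a simplified illustration, not NVIDIA's implementation: the `Request` class, the `dynamic_batches` function, and the `max_batch_size` and `max_delay_ms` parameters are hypothetical names chosen for this example. Requests that arrive close together are grouped into one batch until the batch is full or the oldest waiting request has waited too long.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Request:
    request_id: int
    arrival_ms: float  # time the scoring request reached the server


def dynamic_batches(requests: List[Request],
                    max_batch_size: int,
                    max_delay_ms: float) -> List[List[Request]]:
    """Group requests into batches by arrival time.

    A batch is closed when it is full, or when the next request arrives
    more than max_delay_ms after the first request in the batch.
    """
    batches: List[List[Request]] = []
    current: List[Request] = []
    for req in sorted(requests, key=lambda r: r.arrival_ms):
        batch_full = len(current) == max_batch_size
        waited_too_long = bool(current) and (
            req.arrival_ms - current[0].arrival_ms > max_delay_ms
        )
        if current and (batch_full or waited_too_long):
            batches.append(current)
            current = []
        current.append(req)
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    arrivals = [0, 1, 2, 3, 10, 11]
    reqs = [Request(i, t) for i, t in enumerate(arrivals)]
    for batch in dynamic_batches(reqs, max_batch_size=4, max_delay_ms=5):
        print([r.request_id for r in batch])
```

In this example, six transaction-scoring requests are served with two GPU calls instead of six, which is the source of the utilization gain described above.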

NVIDIA AI-Q Blueprint for Loan Origination in Retail Banking

Credit underwriting is an important capability in banking, spanning many different lending activities such as mortgages, credit card lending, commercial banking, and trade finance. These processes have historically been inefficient given the number of activities involved in the origination process, from application submission to funding, and the numerous roles participating in the decision process.

While traditional AI/ML models can streamline many individual activities in the loan origination workflow, the process still feels slow and fragmented from the customer’s perspective. This is where agentic AI can have a significant impact: it can reduce the effort required to collect and summarize information and to draft credit decisions. It can also deliver a consistent lending experience by standardizing reviews during the approval process. Additionally, it can deliver personalized product recommendations based on the customer’s behaviors and spending patterns, using a multi-agent workflow that orchestrates various tools, data, and AI agents.

By leveraging NVIDIA AI-Q Blueprint on NVIDIA accelerated computing with the Cloudera AI Inference service, banking organizations can put this vision into practice. For example, by using AI-Q Blueprint, Cloudera can orchestrate a multi-agent workflow that includes a GenAI-based personalized loan advisor deployed on NVIDIA NIM, an AI-based document processing agent that uses optical character recognition (OCR) and natural language processing (NLP) techniques, and existing credit decisioning tools.

Next Steps

The combined power of Cloudera’s unified, cloud-anywhere data platform and NVIDIA’s hardware and software capabilities offers a holistic solution for the development of agentic AI solutions.

Visit this page to learn more about the Cloudera AI Inference service.

Read this whitepaper by Enterprise Strategy Group to learn about the Cloudera + NVIDIA joint value proposition.