Drive AI development and deployment while safeguarding all stages of the AI lifecycle.

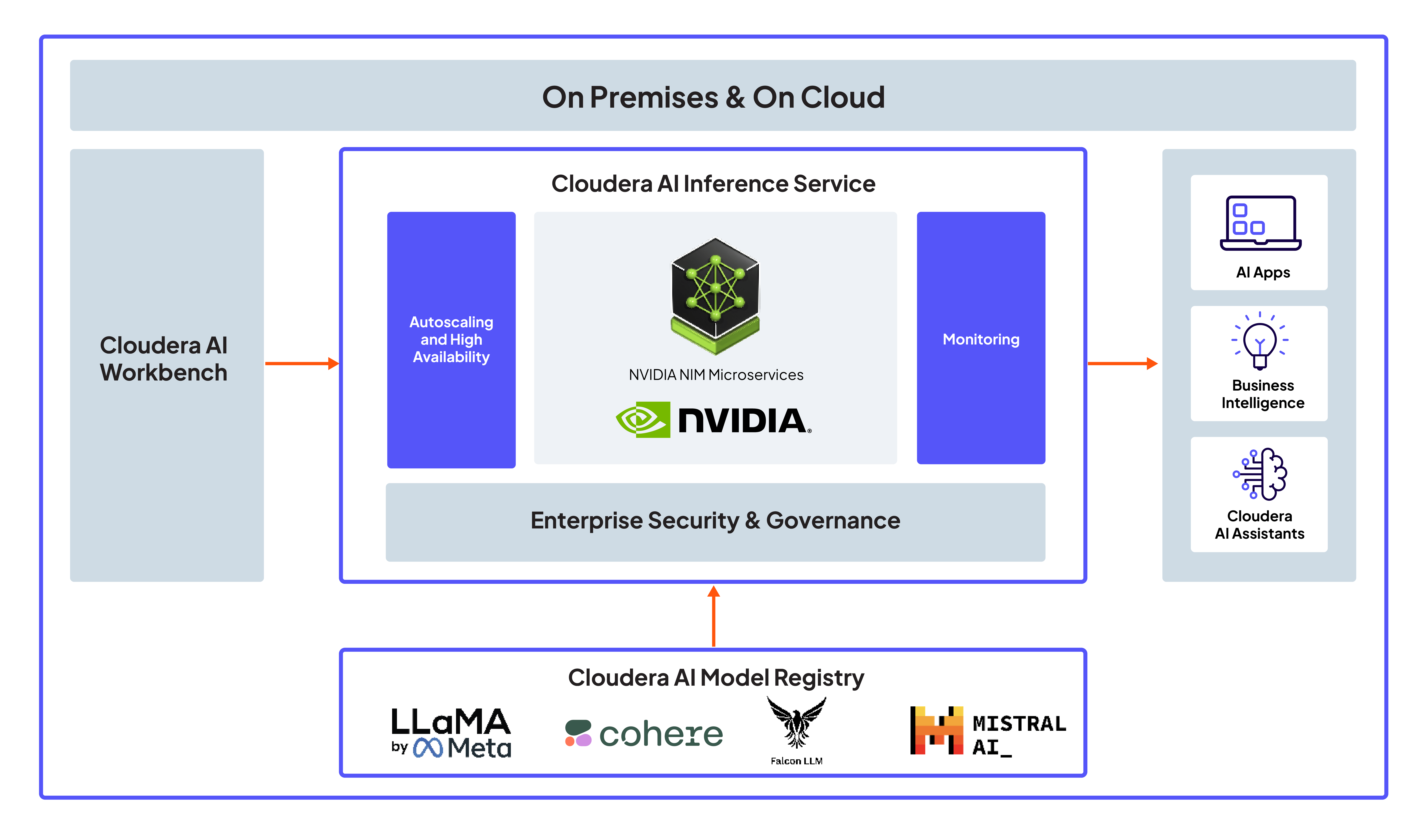

Powered by NVIDIA NIM microservices, the Cloudera AI Inference service delivers market-leading performance: up to 36x faster inference on NVIDIA GPUs and nearly 4x the throughput on CPUs. It also streamlines AI management and governance across public and private clouds.

One service for all your enterprise AI inference needs

One-click deployment: Move your model from development to production quickly, regardless of environment.

One secured environment: Get robust end-to-end security covering all stages of your AI lifecycle.

One platform: Manage all of your models through a single platform that covers your AI needs.

One-stop support: Receive unified support from Cloudera for all your hardware and software questions.

AI Inference service key features

AI Inference service deployment options

Run inference workloads on-premises or in the cloud, without compromising performance, security, or control.

Cloudera on cloud

- Multi-cloud flexibility: Deploy across public clouds and avoid ecosystem lock-in.

- Faster time to value: Start inferencing without setting up infrastructure, which makes it ideal for rapid experimentation.

- Elastic scalability: Handle unpredictable traffic with scale-to-zero autoscaling and GPU-optimized microservices.

Cloudera on premises

- Data sovereignty: Retain full control by keeping models, prompts, and assets behind your firewall.

- Air-gap ready: Built for regulated environments such as government, healthcare, and financial services.

- Predictable and lower TCO: Avoid billing surprises with fixed pricing and a lower total cost of ownership than token-based cloud APIs.

Experience effortless model deployment for yourself

See how easily you can deploy large language models and manage large-scale AI applications with Cloudera tools.

Model registry integration: Seamlessly access, store, version, and manage models through the centralized Cloudera AI Registry repository.

Easy configuration and deployment: Deploy models across cloud environments, set up endpoints, and tune autoscaling for efficiency.

Performance monitoring: Troubleshoot and optimize based on key metrics such as latency, throughput, resource utilization, and model health.

Take the next step

Explore powerful capabilities and dive into the details with resources and guides that will get you up and running quickly.

AI Inference service product tour

Get an inside look at Cloudera AI Inference service.

AI Inference service documentation

Find everything from feature descriptions to useful implementation guides.
